Extract unstructured data

Structured data follows a consistent, predefined format. This consistency makes structured datasets simple to categorize, search, reorder, or apply a hierarchy to. They can also be easy to automate for logging or reporting, since every record is in the same format. Unstructured data is less definite than structured data, which makes it harder to crawl, search, or apply values and hierarchies to.

The term "unstructured" is a little misleading, because this data does have its own structure; that structure is just amorphous. Using unstructured data often requires additional categorization, such as keyword tagging and metadata, and machine learning can help with that work.

There are two data extraction methods: incremental and full.

Like structured and unstructured data, one isn't universally superior to the other, and both can be vital parts of your quest for business intelligence.

Incremental extraction is the process of pulling only the data that has been altered in an existing dataset. You could use incremental extraction to monitor shifting data, like changes to inventory since the last extraction.

Identifying these changes requires the dataset to have timestamps or a change data capture (CDC) mechanism. To continue the crabbing metaphor, incremental extraction is like using a baited line that goes taut whenever there's a crab on the end: you only pull it when the apparatus signals a change.
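Here is a minimal Python sketch of the timestamp approach to incremental extraction. It assumes each record carries an `updated_at` timestamp (a hypothetical field name) and that the time of the last successful run is stored as a watermark. Only rows changed after the watermark are pulled. A CDC-based setup would read a change log instead.

```python
from datetime import datetime, timezone

# Hypothetical source rows; in practice these would come from a database or API.
SOURCE = [
    {"sku": "A1", "qty": 10, "updated_at": datetime(2024, 1, 1, tzinfo=timezone.utc)},
    {"sku": "B2", "qty": 4, "updated_at": datetime(2024, 1, 5, tzinfo=timezone.utc)},
    {"sku": "C3", "qty": 0, "updated_at": datetime(2024, 1, 9, tzinfo=timezone.utc)},
]

def incremental_extract(rows, watermark):
    """Return only rows modified after `watermark`, plus the new watermark to persist."""
    changed = [r for r in rows if r["updated_at"] > watermark]
    # The new watermark is the latest change seen; if nothing changed, keep the old one.
    new_watermark = max((r["updated_at"] for r in changed), default=watermark)
    return changed, new_watermark

last_run = datetime(2024, 1, 3, tzinfo=timezone.utc)  # when the previous extraction finished
changed, last_run = incremental_extract(SOURCE, last_run)
print([r["sku"] for r in changed])  # ['B2', 'C3']
```

A second run with the updated watermark returns nothing until another row changes. That is the "line going taut" behavior described above.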

Full extraction indiscriminately pulls data from a source at once. This is useful if you want to create a baseline of information or an initial dataset to further refine later.

If the data source has a mechanism for automatically notifying or updating changes after extraction, you may not need incremental extraction. Full extraction is like tossing a huge net into the water and then yanking it up.

Sure, you might get a crab or two, but you'll also get a bunch of other stuff to sift through.

ETL stands for extract, transform, load. You may also have heard of ELT (extract, load, transform). It performs the same basic functions, but it loads the raw data into the target system before transforming it.

When it comes to business intelligence, the ETL process gives businesses a defined, iterative roadmap for harvesting actionable data for later use.

Extract: Data is pulled from a broad source or from multiple sources, so it can be processed or combined with other data.

Transform: The extracted raw data is cleaned up to remove redundancies, fill gaps, and make formatting consistent.

Load: The neatly packaged data is transferred to a specified system for further analysis.
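The three stages can be sketched in a few lines of Python. This is an illustrative toy, not a production pipeline. The CSV source, the `customers` table, and the rule that fills a missing plan with `"free"` are all hypothetical, and an in-memory SQLite database stands in for the target system.

```python
import csv
import io
import sqlite3

# Hypothetical raw export with a duplicate row, a missing value, and inconsistent formatting.
RAW_CSV = """email,name,plan
ANA@example.com , Ana ,pro
ana@example.com,Ana,pro
bo@example.com,Bo,
"""

def extract(text):
    """Extract: read raw rows from the source (here, a CSV string)."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize formatting, fill gaps, and drop duplicates."""
    seen = set()
    clean = []
    for row in rows:
        email = row["email"].strip().lower()
        if email in seen:
            continue  # redundant record
        seen.add(email)
        clean.append({
            "email": email,
            "name": row["name"].strip(),
            "plan": row["plan"].strip() or "free",  # hypothetical default for a missing value
        })
    return clean

def load(rows, conn):
    """Load: write the cleaned rows into the target system (an in-memory SQLite table)."""
    conn.execute("CREATE TABLE IF NOT EXISTS customers (email TEXT PRIMARY KEY, name TEXT, plan TEXT)")
    conn.executemany("INSERT INTO customers VALUES (:email, :name, :plan)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM customers ORDER BY email").fetchall())
# [('ana@example.com', 'Ana', 'pro'), ('bo@example.com', 'Bo', 'free')]
```

Keeping each stage as its own function mirrors the roadmap above. Any one stage can be swapped out, for example by reading from an API instead of a CSV, without touching the others.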

The ETL data extraction process begins with raw data from any number of specified repositories. While extraction and transformation can be done manually, the key to effective ETL is to use data extraction software that can automate the data pull, sort the results, and clean them for storage and later use.

Data extraction tools fall into four categories: cloud-based, batch processing, on-premise, and open-source. These types aren't all mutually exclusive, so some tools may tick a few or even all of these boxes.

Cloud-based tools: These scalable web-based solutions allow you to crawl websites, pull online data, and then access it through a platform, download it in your preferred file type, or transfer it to your own database.

Batch processing tools: If you're looking to move massive amounts of data at once, especially when not all of that data is in consistent or current formats, batch processing tools can help by extracting it in (you guessed it) batches.

On-premise tools: These tools run on your own infrastructure. Data can be harvested as it arrives and then automatically validated, formatted, and transferred to your preferred location.

Open-source tools: Need to extract data on a budget? Look for open-source options, which can be more affordable and accessible for smaller operations.
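As a rough illustration of what batch processing tools do under the hood, the Python sketch below reads a large source in fixed-size chunks and normalizes each chunk before writing it. The record shape, the legacy `"$12.50"` amount format, and the `normalize` rule are hypothetical.

```python
from itertools import islice
from typing import Iterable, Iterator, List

def batched(records: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Yield lists of up to `size` records so a large source never has to fit in memory at once."""
    it = iter(records)
    while batch := list(islice(it, size)):
        yield batch

def normalize(record: dict) -> dict:
    """Hypothetical per-record cleanup: convert legacy string amounts like "$12.50" to integer cents."""
    amount = record["amount"]
    if isinstance(amount, str):
        amount = round(float(amount.replace("$", "")) * 100)
    return {**record, "amount": amount}

# Hypothetical source mixing a current format (int cents) and a legacy one ("$12.50").
source = ({"id": i, "amount": 1250 if i % 2 else "$12.50"} for i in range(10))

loaded = []
for batch in batched(source, size=4):
    loaded.extend(normalize(r) for r in batch)  # stand-in for one bulk write per batch
    print(f"wrote batch of {len(batch)}")
```

Because `source` is a generator, records are only produced as each batch is requested, which is the property that lets batch tools move very large datasets.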

When making decisions, devising campaigns, or scaling, you can never have too much information. But you do need to whittle down that information into digestible bits.

And like all software, data extraction software is better when it incorporates automation. That's not just because automation saves you (or an intern) the effort of combing through massive amounts of files manually. Automating data extraction:

Improves decision-making: With a steady stream of targeted data, you and your team can make decisions based on facts, not assumptions.

Enhances visibility: By identifying and extracting the data you need when you need it, these tools show you exactly where your business stands at any given time.

Increases accuracy: Automation reduces the human error that comes from manually and repeatedly moving and formatting data.

Saves time: Automated extraction tools free up employees to focus on high-value tasks, like applying that data.

Making the most of your data means extracting more actionable information automatically, and then putting it to use. Here are a few examples of how Zapier can help your business do both by connecting and automating the software and processes you depend on.

You can use the Formatter by Zapier to pull contact information and URLs, change the format, and then transfer the data.
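Formatter is configured in Zapier's editor rather than in code, so the following is only an analogous Python sketch of the same idea, not how Formatter works internally. It pulls email addresses and URLs out of free text and normalizes them. The regular expressions are deliberately simple and will miss some valid addresses.

```python
import re

# Deliberately simple patterns; real-world email and URL grammars are far more permissive.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
URL_RE = re.compile(r"https?://[^\s<>\"')]+")

def extract_contacts(text: str) -> dict:
    """Pull email addresses and URLs out of free text and normalize their format."""
    emails = sorted({m.lower() for m in EMAIL_RE.findall(text)})  # lowercase and dedupe
    urls = [u.rstrip(".,;") for u in URL_RE.findall(text)]  # drop trailing sentence punctuation
    return {"emails": emails, "urls": urls}

note = "Reach Dana at Dana.Lee@Example.com or visit https://example.com/pricing. Backup: ops@example.org"
print(extract_contacts(note))
# {'emails': ['dana.lee@example.com', 'ops@example.org'], 'urls': ['https://example.com/pricing']}
```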

True to its name, Email Parser by Zapier automatically recognizes patterns in your emails, parses text from them, and then transfers the text to other apps or databases.
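Here is a minimal Python sketch of template-style email parsing using the standard `email` module, for readers who want to see the underlying idea. It is not Email Parser's implementation. The sample message and the assumption that fields appear as `Label: value` lines are hypothetical.

```python
import re
from email import message_from_string

RAW_EMAIL = """From: shop@example.com
To: me@example.com
Subject: Order confirmation

Thanks for your purchase!
Order number: 10482
Total: $59.90
Ship to: 12 Elm St, Springfield
"""

# Hypothetical template: every "Label: value" line in the body becomes a field.
FIELD_RE = re.compile(r"^(?P<label>[A-Za-z ]+):\s*(?P<value>.+)$", re.MULTILINE)

def parse_email(raw: str) -> dict:
    """Split headers from body, then turn each labeled body line into a snake_case field."""
    msg = message_from_string(raw)
    body = msg.get_payload()  # a plain string here, because the message is not multipart
    fields = {m["label"].strip().lower().replace(" ", "_"): m["value"].strip()
              for m in FIELD_RE.finditer(body)}
    return {"subject": msg["Subject"], **fields}

print(parse_email(RAW_EMAIL))
```

The resulting dictionary (`order_number`, `total`, `ship_to`, and so on) is the kind of structured record that can then be sent to a spreadsheet or database.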

Using a tool like Wachete, you can scrape data from websites, monitor changes like prices and stock, and create an RSS feed from the data.
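Change monitoring of this kind comes down to extracting the same data from two snapshots of a page and comparing them. The Python sketch below assumes a hypothetical page layout in which prices sit in elements with a `price` class. It is not how Wachete works. A real monitor would also fetch the page on a schedule and store the previous snapshot.

```python
from html.parser import HTMLParser

class PriceParser(HTMLParser):
    """Collect the text of elements whose class attribute includes "price"."""
    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        self._in_price = "price" in classes

    def handle_endtag(self, tag):
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())

def extract_prices(html: str) -> list:
    parser = PriceParser()
    parser.feed(html)
    return parser.prices

def detect_change(old_html: str, new_html: str):
    """Compare two snapshots of the same page and report whether the prices changed."""
    before, after = extract_prices(old_html), extract_prices(new_html)
    return before != after, before, after

yesterday = '<ul><li><span class="price">$19.99</span></li></ul>'
today = '<ul><li><span class="price sale">$17.49</span></li></ul>'
print(detect_change(yesterday, today))  # (True, ['$19.99'], ['$17.49'])
```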

For a more role-specific example, CandidateZip can pull data straight from resumes as they arrive in your inbox or cloud storage app.

And here's an example in action: Realty Trust Services, LLC, a real estate brokerage firm in Ohio, used Docparser to cut 50 hours of data extraction per month. The firm automated utility bill scanning and sent the data straight to a Google Sheet, which then automatically sent usage alerts.

Last but not least, Zapier also created Transfer by Zapier, currently in beta. It lets you move app data exactly where you need it, on demand. Explore the guide on how to move data in bulk using Transfer to learn how to move leads, customer information, events, and more, without the cleanup duty.

Whether you're crawling for user behavior, pulling contact information, or monitoring month-over-month ROI, your business is only as strong as your data.

And when you automate data extraction, your valued human team members can spend more time doing valuable human tasks, so you can scale your business, or take the team out for a midday crab boil.

This article was originally published in October. The most recent update was in October. Currently based in Albuquerque, NM, Bryce Emley holds an MFA in Creative Writing from NC State and has nearly a decade of writing and editing experience.

His work has been published in magazines including The Atlantic, Boston Review, Salon, and Modern Farmer, and has received a regional Emmy and awards from venues including Narrative, Wesleyan University, the Edward F. Albee Foundation, and the Pablo Neruda Prize.

From emails and images to social media interactions and beyond, unstructured data defies conventional data models, which creates unique business challenges and opportunities. Processing and using unstructured data requires tools like AI-enhanced OCR (optical character recognition) that convert unorganized information into structured, usable data.

Curious about what falls into the category of unstructured data? It can be textual or non-textual, and human- or machine-generated. The term text data refers to the unstructured information found in emails, text messages, written documents, PDFs, and other files.

Multimedia files, including images (JPEG, PNG, GIF), audio, and video, are unstructured data.

Images, videos, and audio files are stored as encoded binary data that does not follow a predefined pattern or schema. As a result, they are considered unstructured data. For example, a file that looks like nothing more than a stream of bytes may actually be an image of a red car.

The raw data of photos, videos, and audio can't be deciphered directly. It has to be interpreted, by a person or a model, before it can be understood, which is another reason it is classified as unstructured data. Websites, meanwhile, are filled with information presented as long, scattered, and disorganized paragraphs.

This web content holds valuable information, but it is hard to use until it is given a proper structure. The Internet of Things (IoT) is made up of physical devices that collect information about their surroundings and send the data back to the cloud.

IoT devices send back sensor data, which can be both sensitive and unstructured. Examples of IoT devices that send sensor data include traffic monitoring devices and smart speakers such as Amazon Alexa and Google Home devices. Businesses also deal with documents of various types, like PDFs, emails, invoices, orders, and more.

These documents all have different structures. To extract data from PDFs and other paper-based documents, businesses can use intelligent document processing software like Nanonets.

Data can be classified into three types: structured, semi-structured, and unstructured.

Each type of data has its own unique characteristics and potential uses. Let's dive deeper into their differences. Structured data: This is data that adheres to a specific format or schema.

It is organized to make it easily searchable and storable in relational databases. It typically includes data that can be entered, stored, and queried in a fixed format like spreadsheets or SQL databases.

Unstructured data: Unstructured data doesn't follow a specific format or model and is more complex to manage and interpret. It's often text-heavy, but it can also take the form of images, videos, and other media.

This type of data is commonly found on social media or within multimedia content, and it's not organized in a predefined manner.

Semi-structured data: Semi-structured data is a mix of both types. It has no rigid structure, but it often contains tags or other markers that separate semantic elements and enforce hierarchies of records and fields. Examples include JSON and XML files.

Much of the potential value in unstructured data remains untapped because it is difficult to process. Fortunately, AI-powered data extraction tools have made processing and extracting unstructured data much more efficient, saving time and effort.
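To make the three categories concrete, here is the same hypothetical order stored as structured CSV, semi-structured JSON, and unstructured free text, along with what it takes to read each one in Python.

```python
import csv
import io
import json
import re

# The same hypothetical order, stored three ways.
structured = "order_id,customer,total\n1001,Ana,59.90\n"  # fixed columns (CSV)
semi_structured = '{"order_id": 1001, "customer": {"name": "Ana"}, "items": [{"sku": "A1"}]}'  # tagged, nested JSON
unstructured = "Hi, this is Ana. I placed order 1001 last week and paid 59.90 in total."  # free text

# Structured: the schema is known, so fields are read by column name.
row = next(csv.DictReader(io.StringIO(structured)))

# Semi-structured: keys tag each value, but nesting and optional fields can vary per record.
doc = json.loads(semi_structured)

# Unstructured: there is no schema, so fields must be inferred (here with brittle regexes).
order_match = re.search(r"order (\d+)", unstructured)
total_match = re.search(r"paid (\d+\.\d{2})", unstructured)

print(row["order_id"], doc["customer"]["name"], order_match.group(1), total_match.group(1))
```

The structured and semi-structured versions are read by name. The free-text version relies on patterns that break as soon as the customer phrases things differently, and that brittleness is the gap AI-powered extraction tools aim to close.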

With Nanonets, businesses can now focus on strategic decision-making instead of getting bogged down in the tedious task of sorting and filtering data.

Sentiment analysis, or opinion mining, determines the tone of text data: whether it's positive, negative, or neutral.

Sentiment analysis can be further divided into emotion detection, graded (fine-grained) analysis, and multilingual analysis, which extend its precision and applications.

Named entity recognition (NER) is a machine learning technique that locates named entities in text, such as people, organizations, and places, and classifies them into predefined categories. It supports diverse applications, such as training chatbots, identifying medical terms, and automatically categorizing customer issues in customer support.
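The simplest form of sentiment analysis is lexicon-based scoring: add up a polarity score for each known word, and flip the sign when the word follows a negation. The Python sketch below uses a tiny, hypothetical lexicon. It is a toy for illustration, not a trained model or Nanonets' method.

```python
import re

# Tiny hypothetical lexicon; real sentiment lexicons (such as VADER's) score thousands of terms.
LEXICON = {"great": 2, "love": 3, "fast": 1, "slow": -1, "broken": -3, "bad": -2}
NEGATORS = {"not", "never", "no"}

def sentiment(text: str) -> str:
    """Sum word polarities, flipping the sign of a word that directly follows a negator."""
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, word in enumerate(words):
        polarity = LEXICON.get(word, 0)
        if i > 0 and words[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

for review in ["I love it, shipping was fast", "Arrived broken and support was slow", "The box is blue"]:
    print(review, "->", sentiment(review))
```

Emotion detection and graded analysis extend the same idea. They map text to finer categories or to a score scale instead of three labels.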

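Topic modeling, often implemented using Latent Dirichlet Allocation (LDA), automatically discovers topics (groups of words that tend to occur together) across a collection of documents and assigns topics to the words and documents in it.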
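One compact way to see what LDA does is to fit it with collapsed Gibbs sampling, a common inference method. Libraries such as scikit-learn use variational inference instead. The Python sketch below is illustrative only: the toy corpus, whitespace tokenization, and hyperparameters (`alpha`, `beta`, the iteration count) are assumptions, and real text needs far more data and preprocessing.

```python
import random
from collections import defaultdict

def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Fit LDA with collapsed Gibbs sampling; return per-topic word counts and per-document topic counts."""
    rng = random.Random(seed)
    V = len({w for doc in docs for w in doc})                   # vocabulary size
    doc_topic = [[0] * num_topics for _ in docs]                # n_{d,k}
    topic_word = [defaultdict(int) for _ in range(num_topics)]  # n_{k,w}
    topic_total = [0] * num_topics                              # n_k
    assignments = []
    # Start with a random topic for every token.
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            k = rng.randrange(num_topics)
            z_doc.append(k)
            doc_topic[d][k] += 1
            topic_word[k][w] += 1
            topic_total[k] += 1
        assignments.append(z_doc)
    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = assignments[d][i]
                # Remove this token's current assignment from the counts.
                doc_topic[d][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1
                # p(z = t | rest) is proportional to (n_{d,t} + alpha) * (n_{t,w} + beta) / (n_t + V * beta)
                weights = [(doc_topic[d][t] + alpha) * (topic_word[t][w] + beta) / (topic_total[t] + V * beta)
                           for t in range(num_topics)]
                k = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1
    return topic_word, doc_topic

docs = [
    "invoice payment due invoice total".split(),
    "payment total invoice refund".split(),
    "goal match team score".split(),
    "team score goal win match".split(),
]
topic_word, doc_topic = lda_gibbs(docs, num_topics=2)
for k, counts in enumerate(topic_word):
    top = sorted(counts, key=counts.get, reverse=True)[:3]
    print(f"topic {k}: {top}")
```

Each token's topic is resampled in proportion to how often its document uses each topic and how often each topic uses that word. This tends to pull words that co-occur, such as "invoice" and "payment", into the same topic.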

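NLP summarization aims to reduce document length without altering meaning. There are two main methods. Extractive summarization selects the most important sentences from the source, for example based on word frequency. Abstractive summarization generates new text that captures the source's meaning, which can produce a more concise and natural summary.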
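Here is a minimal Python sketch of frequency-based extractive summarization. The stopword list and the scoring rule (average word frequency per sentence) are simplifying assumptions. Abstractive summarization, by contrast, requires a generative language model and is not shown.

```python
import re
from collections import Counter

STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "for", "on", "with", "was", "this", "that", "as"}

def summarize(text: str, num_sentences: int = 2) -> str:
    """Extractive summary: keep the sentences whose words are most frequent in the whole text."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    words = [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]
    freq = Counter(words)

    def score(sentence: str) -> float:
        tokens = [w for w in re.findall(r"[a-z]+", sentence.lower()) if w not in STOPWORDS]
        return sum(freq[t] for t in tokens) / (len(tokens) or 1)  # average, so long sentences aren't favored

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])  # restore original order for readability
    return " ".join(sentences[i] for i in keep)

text = ("Invoices arrive as PDFs. Invoices list totals and due dates. "
        "The office plant needs water. Extraction tools read invoices and totals automatically.")
print(summarize(text))  # Invoices arrive as PDFs. Invoices list totals and due dates.
```

The off-topic sentence about the plant scores lowest because none of its words recur elsewhere in the text, so it is the first to be dropped.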

Summarization benefits include time savings, increased productivity, and comprehensive coverage of facts. Text classification, also called categorization or tagging, assigns tags to text based on its content. It can be rule-based, machine learning-based, or hybrid, relying on linguistic rules, trained models, or a combination of both.

Text classification helps analyze unstructured data efficiently.

A dependency graph is a directed graph that reveals the grammatical relationships between the words in a sentence. Each edge points from a head word to a word that depends on it and is labeled with the relationship, for example subject or object. Dependency parsing produces this graph, which simplifies extracting information from unstructured text, such as who did what to whom.
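The Python sketch below shows the directed-graph idea. The dependency edges are hand-written for illustration, since a parser would normally produce them. They use Universal Dependencies relation labels and identify words by their text for brevity, where a real parse would use token positions.

```python
from collections import defaultdict

# Hand-written parse of "The customer returned the damaged laptop."
# Each edge is (head, dependent, relation) using Universal Dependencies labels.
EDGES = [
    ("returned", "customer", "nsubj"),
    ("customer", "The", "det"),
    ("returned", "laptop", "obj"),
    ("laptop", "the", "det"),
    ("laptop", "damaged", "amod"),
]
ROOT = "returned"

def build_graph(edges):
    """Directed graph as an adjacency list: head -> [(dependent, relation), ...]."""
    graph = defaultdict(list)
    for head, dep, rel in edges:
        graph[head].append((dep, rel))
    return graph

def subject_verb_object(graph, root):
    """Follow the nsubj and obj edges out of the root verb: who did what to whom."""
    children = {rel: dep for dep, rel in graph.get(root, [])}
    subject, obj = children.get("nsubj"), children.get("obj")
    if obj is None:
        return subject, root, None
    # Attach adjective modifiers of the object, e.g. "damaged" -> "damaged laptop".
    modifiers = [dep for dep, rel in graph.get(obj, []) if rel == "amod"]
    return subject, root, " ".join(modifiers + [obj])

graph = build_graph(EDGES)
print(subject_verb_object(graph, ROOT))  # ('customer', 'returned', 'damaged laptop')
```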

Nanonets takes the heavy lifting out of processing unstructured data with its AI, ML, and NLP capabilities. This way, you can automate the data extraction process, transforming large volumes of unstructured information into actionable insights.

Collect the unstructured data you want to make sense of, whether it's images, text files, PDFs, videos, or audio files. Head over to the Nanonets website and upload all the collected data. Don't have an account? No problem: you can set one up in a snap.

You can upload files manually or in bulk from your Google Drive, Dropbox, or SharePoint. You can even use the auto-import options or APIs to import data into the system seamlessly.

You can train a custom OCR model for unique data sets by uploading a few sample sets and tagging the necessary data points. The intuitive interface makes the model training process straightforward, even for those new to machine learning.

The model learns from these samples and becomes more accurate over time, adapting to the specific nuances of your data. Deploy your selected or trained model on the uploaded data to begin the extraction process.

The model will convert unstructured information into a structured format, such as tables, Excel spreadsheets, or CSV files, enhancing readability and analysis potential. You can use the Zapier integration to connect with other tools and automatically send your processed data to the required platforms for further analysis or reporting.
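If you want to post-process extracted fields yourself, turning them into a CSV file is straightforward. In the Python sketch below, the shape of `extracted` (one dict of fields per document) and the field names are assumptions for illustration, not Nanonets' actual output format.

```python
import csv
import io

# Hypothetical output of a document-extraction model: one dict of fields per processed document.
extracted = [
    {"file": "invoice_001.pdf", "vendor": "Acme Corp", "invoice_date": "2024-03-01", "total": "1,250.00"},
    {"file": "invoice_002.pdf", "vendor": "Globex", "invoice_date": "2024-03-04"},  # total not found
]

def to_csv(records, columns):
    """Flatten extracted records into CSV text with a fixed column order; missing fields become empty cells."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, restval="", extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()

print(to_csv(extracted, ["file", "vendor", "invoice_date", "total"]))
```

Fixing the column order up front keeps every export consistent even when some documents are missing fields, and the `csv` module quotes values such as `"1,250.00"` that contain commas.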

Check the quality of extracted results. You can easily fine-tune the model using Nanonets' drag-and-drop platform until the desired accuracy is achieved. Continuous improvements and feedback loops mean that your model becomes more efficient and more intelligent with each use, reducing the need for manual intervention.

Leverage the Nanonets integrations with your existing systems, automating the entire workflow. Set up triggers for incoming data to be processed automatically without manual upload. This integration creates a seamless flow from data collection to insight generation, enabling real-time decision-making and enhanced business intelligence.

Utilize the now-structured data to derive valuable business insights, enabling more informed decision-making. Export the organized data for comprehensive analysis as required. Please note that the process may vary slightly depending on the nature of the unstructured data and the desired insights.

Nanonets streamlines the process with advanced automation workflows, sophisticated OCR technology, and a user-friendly interface. It is also a no-code platform, so you won't need any programming experience to complete the task.

Unstructured data extraction is essential in today's digital age, streamlining operations and enhancing decision-making across various sectors. The transformation of raw, unstructured data into structured, easily retrievable formats such as tables enables businesses to access and analyze information previously obscured by its unstructured state.

This data restructuring improves usability, removes ambiguity, and facilitates seamless integration into existing data systems. Significantly, the utility of unstructured data extraction extends beyond mere data organization.

In the banking industry, for example, these innovations play a pivotal role in growth strategies, helping to identify trends, optimize customer experiences, and ensure regulatory compliance. Scientific research also benefits from the precise refinement of data. Tools designed for unstructured data extraction can distill vast amounts of complex information into concise, actionable insights, making them indispensable in the quest for discovery and innovation.

In essence, the extraction of unstructured data is not just about preserving the integrity of information; it's about unlocking potential, fostering growth, and powering progress. Businesses across industries use unstructured data extraction techniques to make sense of their business documents and add more intelligence to their analytics.

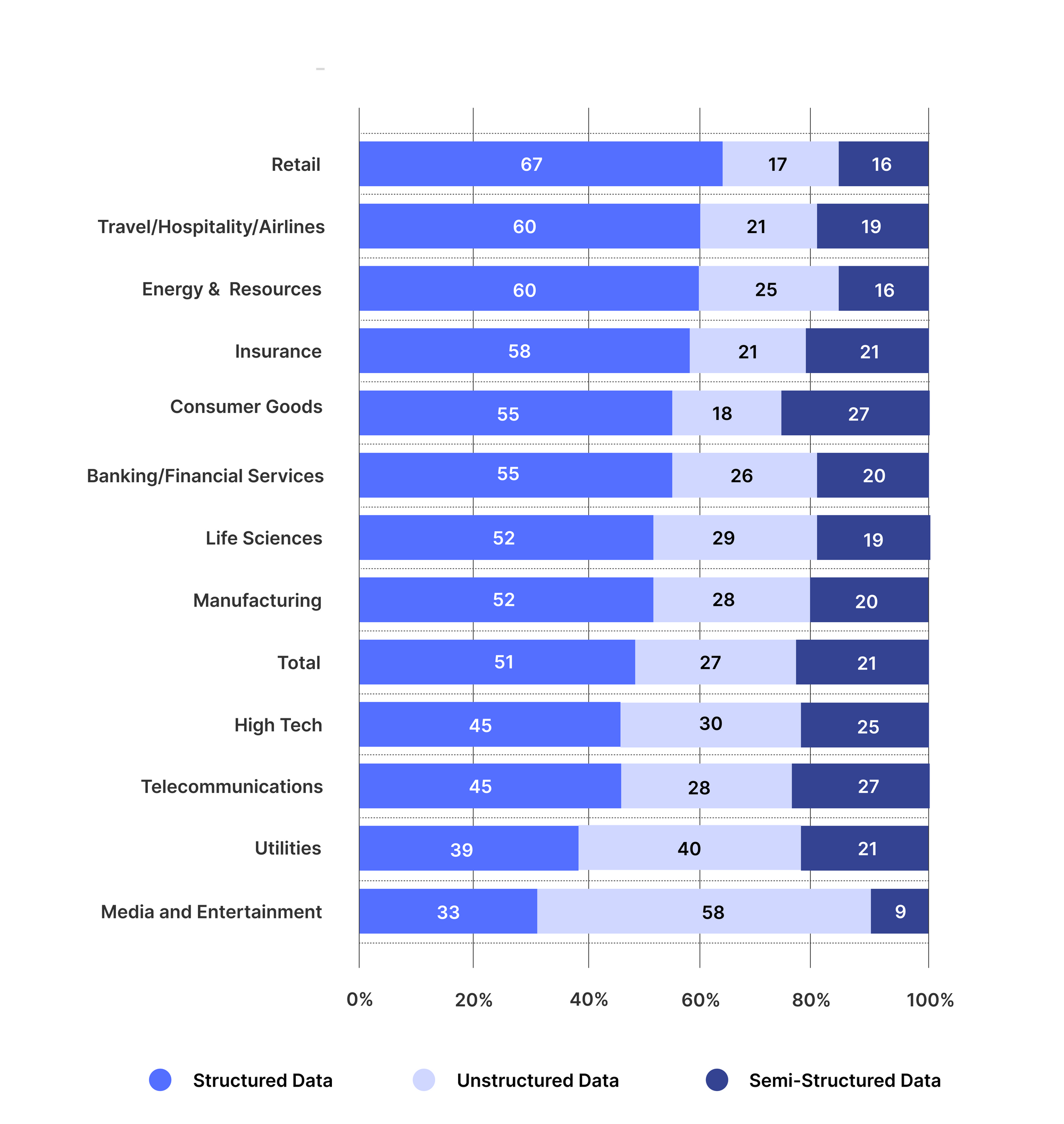

The figure above shows the advent of unstructured data in different industries.

Web scraping, also known as web data extraction, is an automated technique for fetching or extracting required data from websites. It converts unstructured data on the web into structured data that can be warehoused in your database. An automated web data extraction and monitoring solution helps extract unstructured data at scale.
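As a minimal illustration, the Python sketch below turns an HTML table into a list of structured records using only the standard library's `html.parser`. The sample markup is hypothetical. A real scraper would also fetch pages (for example with `urllib.request`), handle messier layouts, and respect each site's terms of use.

```python
from html.parser import HTMLParser

class TableParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""
    def __init__(self):
        super().__init__()
        self.rows, self._row, self._cell = [], None, None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)  # text inside nested tags like <b> is kept too

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

def scrape_table(html: str) -> list:
    """Use the first row as headers and turn every other row into a dict (a structured record)."""
    parser = TableParser()
    parser.feed(html)
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]

page = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>$4.99</td></tr>
  <tr><td>Gadget <b>Pro</b></td><td>$12.00</td></tr>
</table>
"""
print(scrape_table(page))
# [{'Product': 'Widget', 'Price': '$4.99'}, {'Product': 'Gadget Pro', 'Price': '$12.00'}]
```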
