When you are caching data from your database, there are caching patterns for Redis and Memcached that you can implement, including proactive and reactive approaches.

The patterns you choose to implement should be directly related to your caching and application objectives.

Two common approaches are cache-aside, or lazy loading (a reactive approach), and write-through (a proactive approach).

A cache-aside cache is updated after the data is requested.

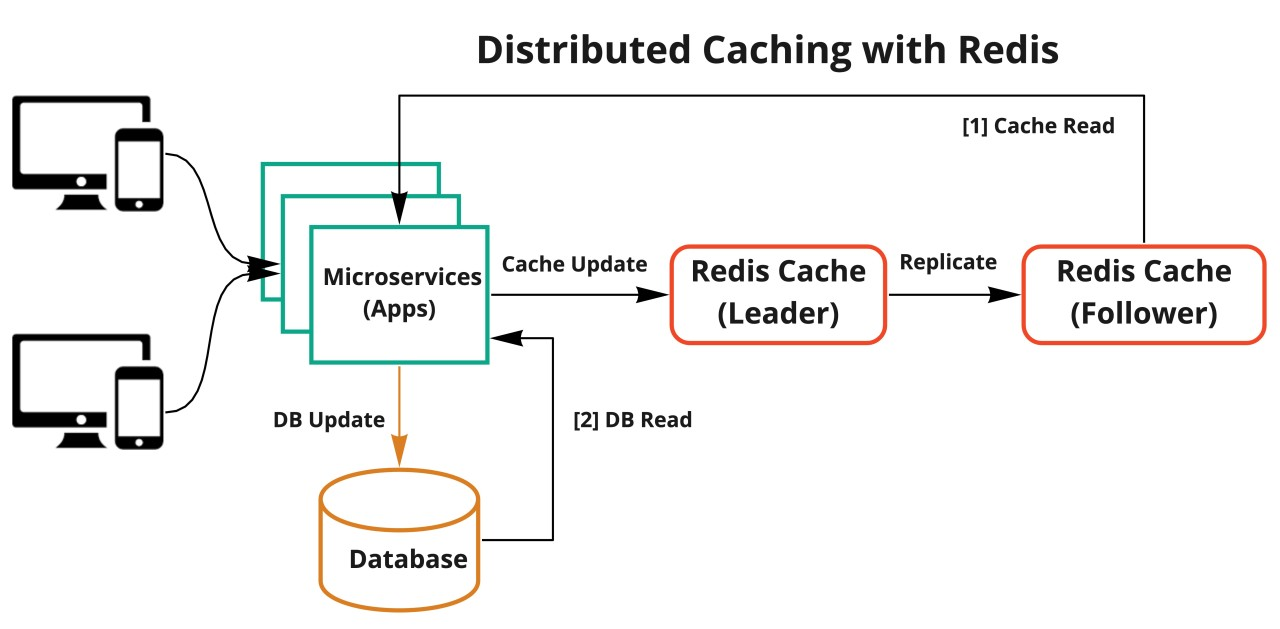

A write-through cache is updated immediately when the primary database is updated. With both approaches, the application is essentially managing what data is being cached and for how long. The diagram is a typical representation of an architecture that uses a remote distributed cache.

A cache-aside cache is the most common caching strategy. The fundamental data retrieval logic can be summarized as follows. When your application needs to read data from the database, it checks the cache first to determine whether the data is available.

If the data is available (a cache hit), the cached data is returned, and the response is issued to the caller. If the data isn't available (a cache miss), the database is queried for the data. The cache is then populated with the data that is retrieved from the database, and the data is returned to the caller.
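The read path described above (check the cache, fall back to the database on a miss, then populate the cache) can be sketched as follows. This is a minimal illustration, not a production implementation: the in-memory dictionary stands in for Redis or Memcached, and `CacheAsideReader` and `load_from_db` are hypothetical names introduced only for this example.

```python
from typing import Callable, Dict, Optional


class CacheAsideReader:
    """Reads through a cache and loads from the database only on a miss."""

    def __init__(self, load_from_db: Callable[[str], Optional[str]]) -> None:
        self._cache: Dict[str, str] = {}   # stand-in for Redis or Memcached
        self._load_from_db = load_from_db  # hypothetical database query
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> Optional[str]:
        if key in self._cache:             # cache hit: return the cached data
            self.hits += 1
            return self._cache[key]
        self.misses += 1                   # cache miss: query the database
        value = self._load_from_db(key)
        if value is not None:
            self._cache[key] = value       # populate the cache for next time
        return value


database = {"customer:1": "Ada"}           # stand-in for the primary database
reader = CacheAsideReader(database.get)
first = reader.get("customer:1")           # miss, loaded from the database
second = reader.get("customer:1")          # hit, served from the cache
```

Only the first read reaches the database; the counters make the hit and miss visible.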

The cache contains only data that the application actually requests, which helps keep the cache size cost-effective.

Implementing this approach is straightforward and produces immediate performance gains, whether you use an application framework that encapsulates lazy caching or your own custom application logic.

A disadvantage when using cache-aside as the only caching pattern is that because the data is loaded into the cache only after a cache miss, some overhead is added to the initial response time because additional roundtrips to the cache and database are needed.

A write-through cache reverses the order of how the cache is populated. Instead of lazy-loading the data in the cache after a cache miss, the cache is proactively updated immediately following the primary database update. The write-through pattern is almost always implemented along with lazy loading.

If the application gets a cache miss because the data is not present or has expired, the lazy loading pattern is performed to update the cache.
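A write-through update combined with a lazy-loading fallback might look like the sketch below. The two dictionaries are stand-ins for the primary database and the cache, and the function names `write_through` and `read` are assumptions made for this example only.

```python
from typing import Dict, Optional

database: Dict[str, str] = {}  # stand-in for the primary database
cache: Dict[str, str] = {}     # stand-in for Redis or Memcached


def write_through(key: str, value: str) -> None:
    database[key] = value      # 1. update the primary database
    cache[key] = value         # 2. immediately afterward, update the cache


def read(key: str) -> Optional[str]:
    if key in cache:           # usually a hit, because writes keep the cache current
        return cache[key]
    value = database.get(key)  # lazy-loading fallback on a miss
    if value is not None:
        cache[key] = value
    return value


write_through("product:7", "kettle")
hit_after_write = "product:7" in cache  # the cache was populated by the write
cache.clear()                           # simulate expiry or eviction
reloaded = read("product:7")            # the miss is repaired by lazy loading
```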

Because the cache is up-to-date with the primary database, there is a much greater likelihood that the data will be found in the cache. This, in turn, results in better overall application performance and user experience.

The performance of your database is optimal because fewer database reads are performed. A disadvantage of the write-through approach is that infrequently-requested data is also written to the cache, resulting in a larger and more expensive cache.

A proper caching strategy includes effective use of both write-through and lazy loading of your data and setting an appropriate expiration for the data to keep it relevant and lean.
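To illustrate the expiration part of that strategy, here is a small sketch of a cache whose entries expire after a fixed time to live. `ExpiringCache` is a hypothetical class; real cache services such as Redis and Memcached provide expiration natively, so this only shows the idea. The clock is injectable so the behaviour can be demonstrated without waiting.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class ExpiringCache:
    """Stores each value with an absolute expiry time."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._items: Dict[str, Tuple[float, str]] = {}

    def set(self, key: str, value: str) -> None:
        self._items[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[str]:
        entry = self._items.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:  # expired: drop it and report a miss
            del self._items[key]
            return None
        return value


now = [0.0]                              # fake clock for the demonstration
cache = ExpiringCache(ttl_seconds=30, clock=lambda: now[0])
cache.set("session:42", "active")
fresh = cache.get("session:42")          # still within the time to live
now[0] = 31.0                            # advance the fake clock past the TTL
expired = cache.get("session:42")        # treated as a cache miss
```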


In the write-through flow, the application, batch, or backend process updates the primary database. Immediately afterward, the data is also updated in the cache.


If cached data isn't static, it's likely that different application instances hold different versions of the data in their own in-memory caches. Therefore, the same query performed by these instances can return different results, as shown in Figure 1.

If you use a shared cache, it can help alleviate concerns that data might differ in each cache, which can occur with in-memory caching. Shared caching ensures that different application instances see the same view of cached data. It locates the cache in a separate location, which is typically hosted as part of a separate service, as shown in Figure 2.

An important benefit of the shared caching approach is the scalability it provides. Many shared cache services are implemented by using a cluster of servers and use software to distribute the data across the cluster transparently. An application instance simply sends a request to the cache service. The underlying infrastructure determines the location of the cached data in the cluster. You can easily scale the cache by adding more servers.

The following sections describe in more detail the considerations for designing and using a cache.

Caching can dramatically improve performance, scalability, and availability. The more data that you have and the larger the number of users that need to access this data, the greater the benefits of caching become.

Caching reduces the latency and contention that's associated with handling large volumes of concurrent requests in the original data store. For example, a database might support a limited number of concurrent connections. Retrieving data from a shared cache, however, rather than the underlying database, makes it possible for a client application to access this data even if the number of available connections is currently exhausted. Additionally, if the database becomes unavailable, client applications might be able to continue by using the data that's held in the cache.
Consider caching data that is read frequently but modified infrequently (for example, data that has a higher proportion of read operations than write operations). However, we don't recommend that you use the cache as the authoritative store of critical information. Instead, ensure that all changes that your application can't afford to lose are always saved to a persistent data store. If the cache is unavailable, your application can still continue to operate by using the data store, and you won't lose important information.

The key to using a cache effectively lies in determining the most appropriate data to cache, and caching it at the appropriate time. The data can be added to the cache on demand the first time it's retrieved by an application. The application needs to fetch the data only once from the data store, and subsequent access can be satisfied by using the cache.

Alternatively, a cache can be partially or fully populated with data in advance, typically when the application starts (an approach known as seeding). However, it might not be advisable to implement seeding for a large cache because this approach can impose a sudden, high load on the original data store when the application starts running. Often an analysis of usage patterns can help you decide whether to fully or partially prepopulate a cache, and to choose the data to cache. For example, you can seed the cache with the static user profile data for customers who use the application regularly (perhaps every day), but not for customers who use the application only once a week.

Caching typically works well with data that is immutable or that changes infrequently. Examples include reference information such as product and pricing information in an e-commerce application, or shared static resources that are costly to construct. Some or all of this data can be loaded into the cache at application startup to minimize demand on resources and to improve performance.
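The selective seeding described above (prepopulating the cache only for customers who use the application regularly) could be sketched like this. The profile fields, the `seed_cache` function, and the visit threshold are all hypothetical and chosen only for illustration.

```python
from typing import Dict, Iterable


def seed_cache(cache: Dict[str, dict], profiles: Iterable[dict],
               min_visits_per_week: int) -> int:
    """Prepopulates the cache with profiles of frequent users only."""
    seeded = 0
    for profile in profiles:
        if profile["visits_per_week"] >= min_visits_per_week:
            cache[f"profile:{profile['id']}"] = profile
            seeded += 1
    return seeded


profiles = [
    {"id": 1, "name": "Ada", "visits_per_week": 7},    # daily user: seeded
    {"id": 2, "name": "Grace", "visits_per_week": 1},  # weekly user: loaded on demand
]
cache: Dict[str, dict] = {}                            # stand-in for the real cache
count = seed_cache(cache, profiles, min_visits_per_week=5)
```

Profiles that are not seeded would still be loaded on demand the first time they are requested.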
You might also want to have a background process that periodically updates the reference data in the cache to ensure it's up-to-date. Or, the background process can refresh the cache when the reference data changes.

Caching is less useful for dynamic data, although there are some exceptions to this consideration (see the section Cache highly dynamic data later in this article for more information). When the original data changes regularly, either the cached information becomes stale quickly or the overhead of synchronizing the cache with the original data store reduces the effectiveness of caching.

A cache doesn't have to include the complete data for an entity. For example, if a data item represents a multivalued object, such as a bank customer with a name, address, and account balance, some of these elements might remain static, such as the name and address. Other elements, such as the account balance, might be more dynamic. In these situations, it can be useful to cache the static portions of the data and retrieve or calculate only the remaining information when it's required.

We recommend that you carry out performance testing and usage analysis to determine whether prepopulating or on-demand loading of the cache, or a combination of both, is appropriate. The decision should be based on the volatility and usage pattern of the data. Cache utilization and performance analysis are important in applications that encounter heavy loads and must be highly scalable. For example, in highly scalable scenarios you can seed the cache to reduce the load on the data store at peak times.

Caching can also be used to avoid repeating computations while the application is running. If an operation transforms data or performs a complicated calculation, it can save the results of the operation in the cache. If the same calculation is required afterward, the application can simply retrieve the results from the cache.

An application can modify data that's held in a cache.
However, we recommend thinking of the cache as a transient data store that could disappear at any time. Don't store valuable data in the cache only; make sure that you maintain the information in the original data store as well. This means that if the cache becomes unavailable, you minimize the chance of losing data.

When you store rapidly changing information in a persistent data store, it can impose an overhead on the system. For example, consider a device that continually reports status or some other measurement. If an application chooses not to cache this data on the basis that the cached information will nearly always be outdated, then the same consideration could be true when storing and retrieving this information from the data store. In the time it takes to save and fetch this data, it might have changed. In a situation such as this, consider the benefits of storing the dynamic information directly in the cache instead of in the persistent data store. If the data is noncritical and doesn't require auditing, then it doesn't matter if the occasional change is lost.

In most cases, data that's held in a cache is a copy of data that's held in the original data store. The data in the original data store might change after it was cached, causing the cached data to become stale. Many caching systems enable you to configure the cache to expire data and reduce the period for which data may be out of date.

When cached data expires, it's removed from the cache, and the application must retrieve the data from the original data store (it can put the newly fetched information back into the cache). You can set a default expiration policy when you configure the cache. In many cache services, you can also stipulate the expiration period for individual objects when you store them programmatically in the cache.
Some caches enable you to specify the expiration period as an absolute value, or as a sliding value that causes the item to be removed from the cache if it isn't accessed within the specified time. This setting overrides any cache-wide expiration policy, but only for the specified objects.

Consider the expiration period for the cache and the objects that it contains carefully. If you make it too short, objects will expire too quickly and you will reduce the benefits of using the cache. If you make the period too long, you risk the data becoming stale.

It's also possible that the cache might fill up if data is allowed to remain resident for a long time. In this case, any requests to add new items to the cache might cause some items to be forcibly removed in a process known as eviction. Cache services typically evict data on a least-recently-used (LRU) basis, but you can usually override this policy and prevent items from being evicted. However, if you adopt this approach, you risk exceeding the memory that's available in the cache. If that happens, an application that attempts to add an item to the cache will fail with an exception. Some caching implementations might provide additional eviction policies.

Data that's held in a client-side cache is generally considered to be outside the auspices of the service that provides the data to the client. A service can't directly force a client to add or remove information from a client-side cache. This means that it's possible for a client that uses a poorly configured cache to continue using outdated information. For example, if the expiration policies of the cache aren't properly implemented, a client might use outdated information that's cached locally when the information in the original data source has changed.
If you build a web application that serves data over an HTTP connection, you can implicitly force a web client (such as a browser or web proxy) to fetch the most recent information. You can do this if a resource is updated by a change in the URI of that resource. Web clients typically use the URI of a resource as the key in the client-side cache, so if the URI changes, the web client ignores any previously cached versions of a resource and fetches the new version instead.

Caches are often designed to be shared by multiple instances of an application. Each application instance can read and modify data in the cache. Consequently, the same concurrency issues that arise with any shared data store also apply to a cache. In a situation where an application needs to modify data that's held in the cache, you might need to ensure that updates made by one instance of the application don't overwrite the changes made by another instance. Depending on the nature of the data and the likelihood of collisions, you can adopt one of two approaches to concurrency: optimistic or pessimistic.

Avoid using a cache as the primary repository of data; this is the role of the original data store from which the cache is populated. The original data store is responsible for ensuring the persistence of the data.

Be careful not to introduce critical dependencies on the availability of a shared cache service into your solutions. An application should be able to continue functioning if the service that provides the shared cache is unavailable. The application shouldn't become unresponsive or fail while waiting for the cache service to resume. Therefore, the application must be prepared to detect the availability of the cache service and fall back to the original data store if the cache is inaccessible. The Circuit-Breaker pattern is useful for handling this scenario.
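The fallback behaviour described above can be sketched with a deliberately simplified circuit breaker. After a number of consecutive cache failures the wrapper stops calling the cache for a while and reads straight from the data store. `GuardedCache`, `CacheUnavailable`, and the threshold and retry values are hypothetical names and numbers chosen for this example, not part of any particular library.

```python
import time
from typing import Callable, Optional


class CacheUnavailable(Exception):
    """Raised by the (hypothetical) cache client when the service is down."""


class GuardedCache:
    def __init__(self, cache_get: Callable[[str], Optional[str]],
                 store_get: Callable[[str], Optional[str]],
                 failure_threshold: int = 3, retry_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._cache_get = cache_get
        self._store_get = store_get
        self._threshold = failure_threshold
        self._retry_after = retry_after
        self._clock = clock
        self._failures = 0
        self._open_until = 0.0  # the cache is skipped until this time

    def get(self, key: str) -> Optional[str]:
        if self._clock() >= self._open_until:  # circuit closed, or retry is due
            try:
                value = self._cache_get(key)
                self._failures = 0
                if value is not None:
                    return value
            except CacheUnavailable:
                self._failures += 1
                if self._failures >= self._threshold:
                    # Open the circuit: stop calling the cache for a while.
                    self._open_until = self._clock() + self._retry_after
                    self._failures = 0
        return self._store_get(key)            # fall back to the data store


calls = {"cache": 0}


def broken_cache(key: str) -> Optional[str]:
    calls["cache"] += 1
    raise CacheUnavailable()


store = {"order:9": "shipped"}                 # stand-in for the original data store
guarded = GuardedCache(broken_cache, store.get, failure_threshold=2,
                       clock=lambda: 0.0)      # frozen clock for the demonstration
results = [guarded.get("order:9") for _ in range(5)]
```

Every request is still answered from the data store, but the failing cache is contacted only until the threshold is reached.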
The service that provides the cache can be recovered, and once it becomes available, the cache can be repopulated as data is read from the original data store, following a strategy such as the Cache-aside pattern. However, system scalability may be affected if the application falls back to the original data store when the cache is temporarily unavailable. While the cache is being recovered, the original data store could be swamped with requests for data, resulting in timeouts and failed connections.

Consider implementing a local, private cache in each instance of an application, together with the shared cache that all application instances access. When the application retrieves an item, it can check first in its local cache, then in the shared cache, and finally in the original data store. The local cache can be populated using the data in either the shared cache, or in the database if the shared cache is unavailable.

This approach requires careful configuration to prevent the local cache from becoming too stale with respect to the shared cache. However, the local cache acts as a buffer if the shared cache is unreachable. Figure 3 shows this structure.

To support large caches that hold relatively long-lived data, some cache services provide a high-availability option that implements automatic failover if the cache becomes unavailable. This approach typically involves replicating the cached data that's stored on a primary cache server to a secondary cache server, and switching to the secondary server if the primary server fails or connectivity is lost.

To reduce the latency that's associated with writing to multiple destinations, the replication to the secondary server might occur asynchronously when data is written to the cache on the primary server. This approach leads to the possibility that some cached information might be lost if there's a failure, but the proportion of this data should be small, compared to the overall size of the cache.
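The lookup order described earlier in this section (local cache first, then the shared cache, and finally the original data store) can be sketched as follows. `TwoLevelCache` is a hypothetical class, the dictionaries stand in for the real shared cache and data store, and expiration of local entries is deliberately omitted.

```python
from typing import Dict, Optional


class TwoLevelCache:
    """Checks a private local cache, then a shared cache, then the data store."""

    def __init__(self, shared: Dict[str, str], store: Dict[str, str]) -> None:
        self.local: Dict[str, str] = {}  # private to this application instance
        self.shared = shared             # stand-in for the shared cache service
        self.store = store               # stand-in for the original data store

    def get(self, key: str) -> Optional[str]:
        if key in self.local:
            return self.local[key]
        value = self.shared.get(key)
        if value is None:
            value = self.store.get(key)
            if value is not None:
                self.shared[key] = value  # repopulate the shared cache
        if value is not None:
            self.local[key] = value       # keep a local copy as a buffer
        return value


shared: Dict[str, str] = {}
store = {"sku:1": "9.99"}
two_level = TwoLevelCache(shared, store)
first = two_level.get("sku:1")            # loaded from the data store
shared_populated = "sku:1" in shared
shared.clear()                            # simulate the shared cache and the
store.clear()                             # data store becoming unreachable
second = two_level.get("sku:1")           # still served from the local cache
```

A real implementation would give local entries a short expiration so that they don't become too stale with respect to the shared cache.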
If a shared cache is large, it might be beneficial to partition the cached data across nodes to reduce the chances of contention and improve scalability. Many shared caches support the ability to dynamically add and remove nodes and rebalance the data across partitions. This approach might involve clustering, in which the collection of nodes is presented to client applications as a seamless, single cache. Internally, however, the data is dispersed between nodes following a predefined distribution strategy that balances the load evenly. For more information about possible partitioning strategies, see Data partitioning guidance.

Clustering can also increase the availability of the cache. If a node fails, the remainder of the cache is still accessible. Clustering is frequently used in conjunction with replication and failover. Each node can be replicated, and the replica can be quickly brought online if the node fails.

Many read and write operations are likely to involve single data values or objects. However, at times it might be necessary to store or retrieve large volumes of data quickly. For example, seeding a cache could involve writing hundreds or thousands of items to the cache. An application might also need to retrieve a large number of related items from the cache as part of the same request.

Many large-scale caches provide batch operations for these purposes. This enables a client application to package up a large volume of items into a single request and reduces the overhead that's associated with performing a large number of small requests.

For the cache-aside pattern to work, the instance of the application that populates the cache must have access to the most recent and consistent version of the data. In a system that implements eventual consistency (such as a replicated data store) this might not be the case.

One instance of an application could modify a data item and invalidate the cached version of that item.
Another instance of the application might attempt to read this item from a cache, which causes a cache miss, so it reads the data from the data store and adds it to the cache. However, if the data store hasn't been fully synchronized with the other replicas, the application instance could read and populate the cache with the old value. For more information about handling data consistency, see the Data consistency primer.

Irrespective of the cache service you use, consider how to protect the data that's held in the cache from unauthorized access. There are two main concerns: the privacy of the data in the cache, and the privacy of the data as it flows between the cache and the application that uses it.

To protect data in the cache, the cache service might implement an authentication mechanism that requires applications to specify which identities can access data in the cache and which operations these identities are allowed to perform.

You must also protect the data as it flows in and out of the cache. To do this, you depend on the security features provided by the network infrastructure that client applications use to connect to the cache. If the cache is implemented using an on-site server within the same organization that hosts the client applications, then the isolation of the network itself might not require you to take additional steps. If the cache is located remotely and requires a TCP or HTTP connection over a public network (such as the Internet), consider implementing SSL.

Azure Cache for Redis is an implementation of the open source Redis cache that runs as a service in an Azure datacenter. It provides a caching service that can be accessed from any Azure application, whether the application is implemented as a cloud service, a website, or inside an Azure virtual machine. Caches can be shared by client applications that have the appropriate access key.

Azure Cache for Redis is a high-performance caching solution that provides availability, scalability, and security. It typically runs as a service spread across one or more dedicated machines. It attempts to store as much information as it can in memory to ensure fast access.
Azure Cache for Redis is compatible with many of the various APIs that are used by client applications. If you have existing applications that already use Redis running on-premises, Azure Cache for Redis provides a quick migration path to caching in the cloud.

Redis is more than a simple cache server. It provides a distributed in-memory database with an extensive command set that supports many common scenarios. These are described later in this document, in the section Using Redis caching. This section summarizes some of the key features that Redis provides.

Redis supports both read and write operations. In Redis, writes can be protected from system failure either by being stored periodically in a local snapshot file or in an append-only log file. This isn't the case in many caches, which should be considered transitory data stores.

All writes are asynchronous and don't block clients from reading and writing data. When Redis starts running, it reads the data from the snapshot or log file and uses it to construct the in-memory cache. For more information, see Redis persistence on the Redis website.

Redis doesn't guarantee that all writes will be saved if there's a catastrophic failure, but at worst you might lose only a few seconds' worth of data. Remember that a cache isn't intended to act as an authoritative data source, and it's the responsibility of the applications using the cache to ensure that critical data is saved successfully to an appropriate data store. For more information, see the Cache-aside pattern.

Redis is a key-value store, where values can contain simple types or complex data structures such as hashes, lists, and sets. It supports a set of atomic operations on these data types. Keys can be permanent or tagged with a limited time-to-live, at which point the key and its corresponding value are automatically removed from the cache.
For more information about Redis keys and values, visit the page An introduction to Redis data types and abstractions on the Redis website.

Write operations to a Redis primary node are replicated to one or more subordinate nodes. Read operations can be served by the primary or any of the subordinates. If you have a network partition, subordinates can continue to serve data and then transparently resynchronize with the primary when the connection is reestablished. For further details, visit the Replication page on the Redis website.

Redis also provides clustering, which enables you to transparently partition data into shards across servers and spread the load. This feature improves scalability, because new Redis servers can be added and the data repartitioned as the size of the cache increases. This ensures availability across each node in the cluster. For more information about clustering and sharding, visit the Redis cluster tutorial page on the Redis website.

A Redis cache has a finite size that depends on the resources available on the host computer. When you configure a Redis server, you can specify the maximum amount of memory it can use. You can also configure a key in a Redis cache to have an expiration time, after which it's automatically removed from the cache. This feature can help prevent the in-memory cache from filling with old or stale data.

As memory fills up, Redis can automatically evict keys and their values by following a number of policies. The default is LRU (least recently used), but you can also select other policies such as evicting keys at random or turning off eviction altogether (in which case, attempts to add items to the cache fail if it's full). The page Using Redis as an LRU cache provides more information.

Redis enables a client application to submit a series of operations that read and write data in the cache as an atomic transaction.
All the commands in the transaction are guaranteed to run sequentially, and no commands issued by other concurrent clients will be interwoven between them.

However, these aren't true transactions as a relational database would perform them. Transaction processing consists of two stages: the first is when the commands are queued, and the second is when the commands are run. During the command queuing stage, the commands that comprise the transaction are submitted by the client. If some sort of error occurs at this point (such as a syntax error, or the wrong number of parameters), then Redis refuses to process the entire transaction and discards it. During the run phase, Redis performs each queued command in sequence. If a command fails during this phase, Redis continues with the next queued command and doesn't roll back the effects of any commands that have already been run. This simplified form of transaction helps to maintain performance and avoid performance problems that are caused by contention.

Redis does implement a form of optimistic locking to assist in maintaining consistency. For detailed information about transactions and locking with Redis, visit the Transactions page on the Redis website.

Redis also supports nontransactional batching of requests. The Redis protocol that clients use to send commands to a Redis server enables a client to send a series of operations as part of the same request. This can help to reduce packet fragmentation on the network. When the batch is processed, each command is performed. If any of these commands are malformed, they'll be rejected (which doesn't happen with a transaction), but the remaining commands will be performed. There's also no guarantee about the order in which the commands in the batch will be processed.

Redis is focused purely on providing fast access to data, and is designed to run inside a trusted environment that can be accessed only by trusted clients.
Redis supports a limited security model based on password authentication. It's possible to remove authentication completely, although we don't recommend this.

All authenticated clients share the same global password and have access to the same resources. If you need more comprehensive sign-in security, you must implement your own security layer in front of the Redis server, and all client requests should pass through this additional layer. Redis shouldn't be directly exposed to untrusted or unauthenticated clients.

You can restrict access to commands by disabling them or renaming them and by providing only privileged clients with the new names.

Redis doesn't directly support any form of data encryption, so all encoding must be performed by client applications. Additionally, Redis doesn't provide any form of transport security. If you need to protect data as it flows across the network, we recommend implementing an SSL proxy. For more information, visit the Redis security page on the Redis website.

Azure Cache for Redis provides its own security layer through which clients connect. The underlying Redis servers aren't exposed to the public network.

Azure Cache for Redis provides access to Redis servers that are hosted at an Azure datacenter. It acts as a façade that provides access control and security. You can provision a cache by using the Azure portal.

The portal provides a number of predefined configurations. Using the Azure portal, you can also configure the eviction policy of the cache, and control access to the cache by adding users to the roles provided. These roles, which define the operations that members can perform, include Owner, Contributor, and Reader. For example, members of the Owner role have complete control over the cache (including security) and its contents, members of the Contributor role can read and write information in the cache, and members of the Reader role can only retrieve data from the cache.
Most administrative tasks are performed through the Azure portal. For this reason, many of the administrative commands that are available in the standard version of Redis aren't available, including the ability to modify the configuration programmatically, shut down the Redis server, configure additional subordinates, or forcibly save data to disk.

The Azure portal includes a convenient graphical display that enables you to monitor the performance of the cache. For example, you can view the number of connections being made, the number of requests being performed, the volume of reads and writes, and the number of cache hits versus cache misses. Using this information, you can determine the effectiveness of the cache and, if necessary, switch to a different configuration or change the eviction policy.

Additionally, you can create alerts that send email messages to an administrator if one or more critical metrics fall outside of an expected range. For example, you might want to alert an administrator if the number of cache misses exceeds a specified value in the last hour, because it means the cache might be too small or data might be being evicted too quickly.

For further information and examples showing how to create and configure an Azure Cache for Redis, visit the page Lap around Azure Cache for Redis on the Azure blog.

If you build ASP.NET web applications that run by using Azure web roles, you can save session state information and HTML output in an Azure Cache for Redis. The session state provider for Azure Cache for Redis enables you to share session information between different instances of an ASP.NET web application, and is very useful in web farm situations where client-server affinity isn't available and caching session data in-memory wouldn't be appropriate.

Using the session state provider with Azure Cache for Redis delivers several benefits. For more information, see ASP.NET session state provider for Azure Cache for Redis.
Don't use the session state provider for Azure Cache for Redis with ASP.NET applications that run outside of the Azure environment. The latency of accessing the cache from outside of Azure can eliminate the performance benefits of caching data.

Similarly, the output cache provider for Azure Cache for Redis enables you to save the HTTP responses generated by an ASP.NET web application. Using the output cache provider with Azure Cache for Redis can improve the response times of applications that render complex HTML output. Application instances that generate similar responses can use the shared output fragments in the cache rather than generating this HTML output afresh. For more information, see ASP.NET output cache provider for Azure Cache for Redis.

Azure Cache for Redis acts as a façade to the underlying Redis servers. If you require an advanced configuration that isn't covered by Azure Cache for Redis (such as a cache bigger than 53 GB), you can build and host your own Redis servers by using Azure virtual machines.

This is a potentially complex process because you might need to create several VMs to act as primary and subordinate nodes if you want to implement replication. Furthermore, if you wish to create a cluster, then you need multiple primaries and subordinate servers. However, each set of pairs can be running in different Azure datacenters located in different regions, if you wish to locate cached data close to the applications that are most likely to use it. For an example of building and configuring a Redis node running as an Azure VM, see Running Redis on a CentOS Linux VM in Azure.

If you implement your own Redis cache in this way, you're responsible for monitoring, managing, and securing the service.

Partitioning the cache involves splitting the cache across multiple computers. This structure gives you several advantages over using a single cache server.

For a cache, the most common form of partitioning is sharding.
In this strategy, each partition or shard is a Redis cache in its own right. Data is directed to a specific partition by using sharding logic, which can use a variety of approaches to distribute the data. The Sharding pattern provides more information about implementing sharding. The page Partitioning: how to split data among multiple Redis instances on the Redis website provides further information about implementing partitioning with Redis. Redis supports client applications written in numerous programming languages. If you build new applications by using the .NET Framework, we recommend that you use the StackExchange.Redis client library. This library provides a .NET Framework object model that abstracts the details for connecting to a Redis server, sending commands, and receiving responses. It's available in Visual Studio as a NuGet package. You can use this same library to connect to an Azure Cache for Redis, or a custom Redis cache hosted on a VM. To connect to a Redis server, you use the static Connect method of the ConnectionMultiplexer class. The connection that this method creates is designed to be used throughout the lifetime of the client application, and the same connection can be used by multiple concurrent threads. Don't reconnect and disconnect each time you perform a Redis operation because this can degrade performance. You can specify the connection parameters, such as the address of the Redis host and the password. If you use Azure Cache for Redis, the password is either the primary or secondary key that is generated for Azure Cache for Redis by using the Azure portal. After you have connected to the Redis server, you can obtain a handle on the Redis database that acts as the cache. The Redis connection provides the GetDatabase method to do this. The main purpose of caching is to improve performance and efficiency by reducing the need to repeatedly retrieve or compute the same data.
This relatively simple concept is a powerful tool in your web performance tool kit. You might think of caching like keeping your favorite snacks in your desk drawer; you know exactly where to find them and can grab them without wasting time looking for them. When a visitor comes to your website, requesting a specific page, the server retrieves the stored copy of the requested web page and displays it to the visitor. This is much faster than going through the traditional process of assembling the webpage from various parts stored in a database from scratch. Additionally, the concepts of a cache hit and miss are important in understanding server-side caching. The cache hit is the jackpot in caching. Fast, efficient, and exactly what you should aim for. The cache miss is the flip side of the coin. In these cases, the server reverts to retrieving the resource from the original source. It employs a variety of protocols and technologies, like:. Both server-side and client-side caching have their own unique characteristics and strengths. Using both server-side and client-side caching can make your website consistently fast, offering a great experience to users. Cache coherency refers to the consistency of data stored in different caches that are supposed to contain the same information. When multiple processors or cores are accessing and modifying the same data, ensuring cache coherency becomes difficult. Serving stale content occurs when outdated cached content is displayed to the users instead of the most recent information from the origin server. This can negatively affect the accuracy and timeliness of the information presented to users. Dynamic content changes frequently based on user interactions or real-time data. It also includes user-specific data, such as personalized recommendations or user account details. This makes standard caching mechanisms, which treat all requests equally, not effective for personalized content. 
Finding the optimal caching strategy that maintains this balance is often complex. NGINX, renowned for its high performance and stability, handles web requests efficiently. It acts as a reverse proxy, directing traffic and managing requests in a way that maximizes speed and minimizes server load. PHP-FPM (FastCGI Process Manager) complements this by efficiently rendering dynamic PHP content. This combination ensures that every aspect of your website, from static images to dynamic user-driven pages, is delivered quickly and reliably. It uses the principles of edge computing, which means data is stored and delivered from the nearest server in the network. This proximity shortens delivery times, as data travels a shorter distance to reach the user. With data being handled by a network of edge servers, your main server is freed up to perform other critical tasks, ensuring overall efficiency and stability. While both are effective, the edge cache offers a significant advantage in terms of site speed and efficiency. It represents a leap forward in caching technology for an array of websites and their visitors. Server-side caching is an indispensable tool in ensuring your website runs well. Smooth, efficient, and user-friendly: these are the hallmarks of a website that uses advanced caching solutions. |

| Caching guidance - Azure Architecture Center | Microsoft Learn | AOF captures a log of write operations, enabling Redis to reconstruct the dataset by replaying the log. For example, if a report is generated using data from yesterday, but new data has been added since then, the report will contain stale information. Server-side caching takes place on the server, where the cached data is stored. The gossip protocol is used in Redis Cluster to maintain cluster health. The refresh-ahead caching strategy, also known as prefetching, proactively retrieves data from the data source into the cache before it is explicitly requested. |

| Folders and files | Updating Access Frequency : After the eviction, LFU updates the access frequency count of the newly inserted item to indicate that it has been accessed once. LFU is based on the assumption that items that have been accessed frequently are likely to be popular and will continue to be accessed frequently in the future. By evicting the least frequently used items, LFU aims to prioritize keeping the more frequently accessed items in the cache, thus maximizing cache hit rates and improving overall performance. However, LFU may not be suitable for all scenarios. It can result in the eviction of items that were accessed heavily in the past but are no longer relevant. Additionally, LFU may not handle sudden changes in access patterns effectively. For example, if an item was rarely accessed in the past but suddenly becomes popular, LFU may not quickly adapt to the new access frequency and may prematurely evict the item. Here are some potential use cases where the LFU Least Frequently Used eviction cache policy can be beneficial:. Web Content Caching : LFU can be used to cache web content such as HTML pages, images, and scripts. Frequently accessed content, which is likely to be requested again, will remain in the cache, while less popular or rarely accessed content will be evicted. This can improve the responsiveness of web applications and reduce the load on backend servers. Database Query Result Caching : In database systems, LFU can be applied to cache the results of frequently executed queries. The cache can store query results, and LFU ensures that the most frequently requested query results remain in the cache. This can significantly speed up subsequent identical or similar queries, reducing the need for repeated database accesses. API Response Caching : LFU can be used to cache responses from external APIs. 
Popular API responses that are frequently requested by clients can be cached using LFU, improving the performance and reducing the dependency on external API services. This is particularly useful when the API responses are relatively static or have limited change frequency. Content Delivery Networks CDNs : LFU can be employed in CDNs to cache frequently accessed content, such as images, videos, and static files. Content that is heavily requested by users will be retained in the cache, resulting in faster content delivery and reduced latency for subsequent requests. Recommendation Systems : LFU can be utilized in recommendation systems to cache user preferences, item ratings, or collaborative filtering results. By caching these data, LFU ensures that the most relevant and frequently used information for generating recommendations is readily available, leading to faster and more accurate recommendations. File System Caching : LFU can be applied to cache frequently accessed files or directory structures in file systems. This can improve file access performance, especially when certain files or directories are accessed more frequently than others. Session Data Caching : In web applications, LFU can cache session data, such as user session variables or session-specific information. Frequently accessed session data will be kept in the cache, reducing the need for repeated retrieval and improving application responsiveness. Each system should evaluate its requirements and consider the pros and cons of different eviction cache policies to determine the most appropriate approach. YouTube could use LFU caching for storing and serving frequently accessed video metadata, such as titles, descriptions, thumbnails, view counts, and durations. The LFU cache would prioritize caching the metadata of popular and frequently viewed videos, as users are more likely to watch them. 
User Feed and Explore Recommendations: Instagram could use LFU caching for storing and serving user feeds and Explore recommendations. A write-through cache is a caching technique that ensures data consistency between the cache and the underlying storage or memory. In a write-through cache, whenever data is modified or updated, the changes are written to both the cache and the main storage as part of the same operation. This approach keeps the cache and the persistent storage consistent. Data Modification: When a write operation is performed on the cache, the modified data is first written to the cache. Write to Main Storage: The same change is then written to the main storage before the write is considered complete. This ensures that the updated data is permanently stored and persisted. Data Consistency: By writing through to the main storage, the write-through cache ensures that the cache and the underlying storage always have consistent data. Any subsequent read operation for the same data will retrieve the most up-to-date version. Read Operations: Read operations can be serviced by either the cache or the main storage, depending on whether the requested data is currently present in the cache. If the data is in the cache (cache hit), it is retrieved quickly. If the data is not in the cache (cache miss), it is fetched from the main storage and then stored in the cache for future access. Data Consistency: The write-through cache maintains data consistency between the cache and the main storage, ensuring that modifications are immediately reflected in the persistent storage. Crash Safety: Since data is written to the main storage as part of every write, even if there is a system crash or power failure, the data remains safe and up-to-date in the persistent storage. Read Performance: Read operations can benefit from the cache, as frequently accessed data is available in the faster cache memory, reducing the need to access the slower main storage.
Simplicity: Write-through cache implementation is relatively straightforward and easier to manage than other caching strategies. Write Latency: The write operation in the write-through cache takes longer as it involves writing to both the cache and the main storage, which can increase the overall latency of write operations. Increased Traffic: The write-through cache generates more write traffic on the storage subsystem, as every write operation involves updating both the cache and the main storage. Write-through caching is commonly used in scenarios where data consistency is critical, such as in databases and file systems, where immediate persistence of updated data is essential. Time-To-Live (TTL): This policy associates a time limit with each item in the cache. When an item exceeds its predefined time limit (time-to-live), it is evicted from the cache. TTL-based eviction helps keep cached data fresh by bounding how long an entry can be served. Random Replacement (RR): This policy randomly selects items for eviction from the cache. When the cache is full and a new item needs to be inserted, a random item is chosen and evicted. Random replacement does not consider access patterns or frequencies and can lead to unpredictable cache performance. Memoization is a technique used in computer programming to optimize the execution time of functions by caching the results of expensive function calls and reusing them when the same inputs occur again. It is closely associated with top-down dynamic programming and eliminates redundant computations by storing the results of function calls in memory. Storing results in a Map keyed by the function's input is a good example. In the recursive Fibonacci algorithm, we can use a Map to cache Fibonacci numbers that have already been computed, so that no number is processed twice. FIFO (First In, First Out) is a cache eviction policy that removes the oldest inserted item from the cache when it reaches its capacity limit.
In other words, the item that has been in the cache for the longest time is the first to be evicted when space is needed for a new item. Think of a real-world queue: if you are the first person in the queue, you are served first. Insertion of Items: When a new item is inserted into the cache, it is placed at the end of the cache structure, becoming the most recently added item. Cache Capacity Limit: When a new item needs to be inserted, the cache checks whether it is already full. If it is full, the FIFO policy comes into play. Eviction Decision: In FIFO, the item that has been in the cache the longest is selected for eviction. This item is typically the one at the front or beginning of the cache structure, representing the first item that entered the cache. In a write-through cache, instead of lazy-loading the data in the cache after a cache miss, the cache is proactively updated immediately following the primary database update. The write-through pattern is almost always implemented along with lazy loading. If the application gets a cache miss because the data is not present or has expired, the lazy loading pattern is performed to update the cache. Because the cache is up-to-date with the primary database, there is a much greater likelihood that the data will be found in the cache. This, in turn, results in better overall application performance and user experience. The performance of your database is optimal because fewer database reads are performed. A disadvantage of the write-through approach is that infrequently requested data is also written to the cache, resulting in a larger and more expensive cache. A proper caching strategy includes effective use of both write-through and lazy loading of your data and setting an appropriate expiration for the data to keep it relevant and lean.
While the RedisCacheLayer does handle cache expiration directly via Redis, none of the other official cache layers do. Unless you are only using the Redis cache layer, you will want to include this extension in your cache stack. The RedisLockExtension uses Redis as a shared lock between multiple instances of your application. Using Redis in this way can avoid cache stampedes where multiple different web servers are refreshing values at the same instant. This works in the situation where one web server has refreshed a key and wants to let the other web servers know their data is now old. Cache Tower has been built from the ground up for high performance and low memory consumption. Across a number of benchmarks against other caching solutions, Cache Tower performs similarly or better than the competition. What Cache Tower gains in speed, it may lack in the variety of features common amongst other caching solutions. It is important to weigh both the feature set and performance when deciding on a caching solution. There are times when you want to clear all cache layers, whether to help with debugging an issue or to force fresh data on subsequent calls to the cache. This type of action is available in Cache Tower but is somewhat hidden to prevent accidental use. Please only flush the cache if you know what you're doing and what it would mean! This interface exposes the method FlushAsync. For the MemoryCacheLayer, the backing store is cleared. For file cache layers, all cache files are removed. For MongoDB, all documents are deleted in the cache collection. For Redis, a FlushDB command is sent. Combined with the RedisRemoteEvictionExtension, a call to FlushAsync will additionally be sent to all connected CacheStack instances.
Cache Tower is an efficient multi-layered caching system for .NET, released under the MIT license. Its README examples reference AddMemoryCacheLayer, WithCleanupFrequency with TimeSpan.FromMinutes(5), CacheSettings built from TimeSpan values such as TimeSpan.FromDays(1) and TimeSpan.FromMinutes(60), a staleAfter value of TimeSpan.FromMinutes(30), GetCacheStack("MyAwesomeCacheStack"), and a cast to IFlushableCacheStack followed by FlushAsync. |

| Understanding API caching | Invalidating on Modification With invalidating on modification, the cache is invalidated when data in the underlying data source is modified. However, these aren't true transactions as a relational database would perform them. It allows for temporary discrepancies between the cached and database data, trading off a bit of accuracy for higher performance and scalability. Application caching improves response times by reducing the need for repeated data retrieval and computation, enhancing the overall performance of the application. Bond is a cross-platform framework for working with schematized data. |

| Defining Server-Side Caching: An In-Depth Look | On-path and off-path caching schemes, collaboration between routers, and energy consumption minimization can further improve caching efficiency in CCN. Coordinating content caching and retrieval using Content Store (CS) information in Information-Centric Networking (ICN) can significantly improve cache hit ratio, content retrieval latency, and energy efficiency. Considering both content popularity and priority in hierarchical network architectures with multi-access edge computing (MEC) and software-defined networking (SDN) controllers can optimize content cache placement and improve quality of experience (QoE). Optimal scheduling of cache updates, content recommendation, and age of information (AoI) can enhance caching efficiency and freshness of information in time-slotted systems.
With a CDN, a request first asks the CDN for the data; if the data exists there, it is returned. If not, the CDN queries the backend servers and then caches the response locally. Facebook, Instagram, Amazon, Flipkart: these are favorite applications for a lot of people and are most probably among the most frequently visited websites on your list.
Have you ever noticed that these websites take less time to load than brand-new websites? And have you noticed ever that on a slow internet connection when you browse a website, texts are loaded before any high-quality image? Why does this happen? The answer is Caching. You can take the example of watching your favorite series on any video streaming application. How would you feel if the video keeps buffering all the time? All the above problems can be solved by improving retention and engagement on your website and by delivering the best user experience. And one of the best solutions is Caching. Caching optimizes resource usage, reduces server loads, and enhances overall scalability, making it a valuable tool in software development. Despite its advantages, caching comes with drawbacks also and some of them are:. Overall, caching is a powerful system design concept that can significantly improve the performance and efficiency of a system. By understanding the key principles of caching and the potential advantages and disadvantages, developers can make informed decisions about when and how to use caching in their systems. Cache invalidation is crucial in systems that use caching to enhance performance. However, if the original data changes, the cached version becomes outdated. Cache invalidation mechanisms ensure that outdated entries are refreshed or removed, guaranteeing that users receive up-to-date information. Eviction policies are crucial in caching systems to manage limited cache space efficiently. When the cache is full and a new item needs to be stored, an eviction policy determines which existing item to remove. Each policy has its trade-offs in terms of computational complexity and adaptability to access patterns. Choosing the right eviction policy depends on the specific requirements and usage patterns of the application, balancing the need for efficient cache utilization with the goal of minimizing cache misses and improving overall performance. 
|

Efficient caching system

When the cache is full and a new item needs to be inserted, a random item is chosen and evicted. Random replacement does not consider access patterns or frequencies and can lead to unpredictable cache performance.

Memoization is a technique used in computer programming to optimize the execution time of functions by caching the results of expensive function calls and reusing them when the same inputs occur again. It is closely associated with top-down dynamic programming and eliminates redundant computations by storing the results of function calls in memory. Storing results in a Map keyed by the function's input is a good example. In the recursive Fibonacci algorithm, we can use a Map to cache Fibonacci numbers that have already been computed, so that no number is processed twice.
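The Fibonacci case can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: a plain `dict` stands in for the Map, and the function name `fib` and its `cache` parameter are chosen here for the example.

```python
def fib(n, cache=None):
    """Return the n-th Fibonacci number, memoizing results in a dict."""
    if cache is None:
        cache = {}  # one fresh cache per top-level call
    if n < 2:
        return n  # base cases: fib(0) = 0, fib(1) = 1
    if n not in cache:
        # Compute each value once; later lookups are served from the cache.
        cache[n] = fib(n - 1, cache) + fib(n - 2, cache)
    return cache[n]


print(fib(50))  # 12586269025
```

Without the cache, the naive recursion recomputes the same subproblems exponentially many times; with it, each value from 2 to n is computed once.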

FIFO (First In, First Out) is a cache eviction policy that removes the oldest inserted item from the cache when it reaches its capacity limit. In other words, the item that has been in the cache for the longest time is the first to be evicted when space is needed for a new item.

Think of a real-world queue: if you are the first person in the queue, you are served first.

Insertion of Items: When a new item is inserted into the cache, it is placed at the end of the cache structure, becoming the most recently added item.

Cache Capacity Limit: When a new item needs to be inserted, the cache checks whether it is already full. If it is full, the FIFO policy comes into play.

Eviction Decision: In FIFO, the item that has been in the cache the longest is selected for eviction. This item is typically the one at the front or beginning of the cache structure, representing the first item that entered the cache.

Eviction of Oldest Inserted Item: The selected item is evicted from the cache to make room for the new item. Once the eviction occurs, the new item is inserted at the end of the cache, becoming the most recently added item.

FIFO cache eviction always removes the item that has been in the cache the longest, regardless of how often it has been read. It is a simple and intuitive eviction policy that can be implemented without much complexity.
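The FIFO steps described above can be sketched as follows. This is a minimal single-threaded illustration; the class name `FIFOCache` and its methods are hypothetical names chosen for this example, and `collections.OrderedDict` is used only because it can pop from the front.

```python
from collections import OrderedDict


class FIFOCache:
    """Fixed-capacity cache that evicts the oldest inserted key first."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()  # preserves insertion order

    def get(self, key, default=None):
        # Reads do not change the eviction order in FIFO.
        return self._items.get(key, default)

    def put(self, key, value):
        if key in self._items:
            self._items[key] = value  # update in place, position unchanged
            return
        if len(self._items) >= self.capacity:
            self._items.popitem(last=False)  # evict the front (oldest) item
        self._items[key] = value  # new item goes to the end

    def keys(self):
        return list(self._items)


cache = FIFOCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")      # reading "a" does not protect it from eviction
cache.put("c", 3)   # cache is full, so "a" (the oldest) is evicted
print(cache.keys())  # ['b', 'c']
```

Note that `"a"` is evicted even though it was just read, which is exactly the weakness of FIFO: access frequency plays no part in the decision.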

However, FIFO may not always be the best choice for cache eviction, as it does not consider the popularity or frequency of item accesses. It may result in the eviction of relevant or frequently accessed items that were inserted earlier but are still in demand.

LIFO (Last In, First Out) is a cache eviction policy that removes the most recently inserted item from the cache when it reaches its capacity limit. In other words, the item that was most recently added to the cache is the first one to be evicted when space is needed for a new item.

Insertion of Items: When a new item is inserted into the cache, it is placed at the top of the cache structure, becoming the most recently added item.

Cache Capacity Limit: When a new item needs to be inserted, the cache checks whether it is already full. If it is full, the LIFO policy comes into play.

Eviction Decision: In LIFO, the item that was most recently inserted into the cache is selected for eviction. This item is the one at the top of the cache structure, representing the last item that entered the cache.

Eviction of Most Recently Inserted Item: The selected item is evicted from the cache to make room for the new item. Once the eviction occurs, the new item is inserted at the top of the cache, becoming the most recently added item.

LIFO cache eviction is straightforward and easy to implement since it only requires tracking the order of insertions.
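As a rough sketch of the LIFO steps, the example below keeps a stack of keys next to a dictionary of values. The `LIFOCache` class and its method names are assumptions made for illustration only.

```python
class LIFOCache:
    """Fixed-capacity cache that evicts the most recently inserted key."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = {}   # key -> value
        self._stack = []   # insertion order, newest key on top (at the end)

    def get(self, key, default=None):
        return self._items.get(key, default)

    def put(self, key, value) -> None:
        if key in self._items:
            # Updating a value does not count as a new insertion.
            self._items[key] = value
            return
        if len(self._items) >= self.capacity:
            # Pop the key at the top of the stack: the last one inserted.
            newest = self._stack.pop()
            del self._items[newest]
        self._items[key] = value
        self._stack.append(key)

    def keys(self):
        return sorted(self._items)


lifo = LIFOCache(capacity=2)
lifo.put("a", 1)
lifo.put("b", 2)
lifo.put("c", 3)   # full: "b" (newest) is evicted, "a" stays
lifo.put("d", 4)   # full again: "c" is evicted, "a" still stays
```

In this run `"a"` is never evicted, which shows how early entries can stay in a LIFO cache indefinitely.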

Because LIFO evicts the newest entry first, it suits situations where older entries are more likely to be accessed again than newly inserted ones. However, LIFO may not always be the best choice for cache eviction: it can evict newly inserted items that are about to be accessed again, while items inserted early stay in the cache even after they stop being used. It may not effectively handle access pattern changes or skewed data popularity over time.

Weighted Random Replacement (WRR): This policy assigns weights to cache items based on their importance or priority. Low-weight items are more likely to be evicted when the cache is full. WRR allows for more fine-grained control over cache eviction based on item importance.

Size-Based Eviction: This policy evicts items based on size or memory footprint. When the cache reaches its capacity limit, the items that occupy the most space are evicted first.
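A size-based policy could look roughly like the sketch below, which assumes values are `bytes` objects and measures the footprint with `len`. The `SizeBasedCache` class, its `max_bytes` parameter, and the sample keys are all hypothetical.

```python
class SizeBasedCache:
    """Cache with a byte budget that evicts the largest entries first."""

    def __init__(self, max_bytes: int) -> None:
        self.max_bytes = max_bytes
        self._items = {}  # key -> bytes value
        self.used = 0     # total size of the stored values

    def put(self, key, value: bytes) -> None:
        if len(value) > self.max_bytes:
            raise ValueError("value is larger than the whole cache")
        if key in self._items:
            # Replacing a value frees the space of the old one first.
            self.used -= len(self._items.pop(key))
        while self.used + len(value) > self.max_bytes:
            # Pick the entry with the largest footprint and evict it.
            largest = max(self._items, key=lambda k: len(self._items[k]))
            self.used -= len(self._items.pop(largest))
        self._items[key] = value
        self.used += len(value)

    def keys(self):
        return sorted(self._items)


sized = SizeBasedCache(max_bytes=10)
sized.put("small", b"ab")        # 2 bytes used
sized.put("big", b"x" * 7)       # 9 bytes used
sized.put("mid", b"1234")        # needs 4 bytes: "big" is evicted first
```

Scanning for the largest entry is linear in the number of items; a real cache would more likely keep the entries in a heap or another ordered structure.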

Size-based eviction helps use cache resources efficiently.

Stale data is data that has become outdated and no longer reflects the current or accurate state of the information it represents.

Staleness can occur in various contexts, such as databases, caches, or replicated systems. Here are a few examples:

Caches: In caching systems, stale data refers to cached information that has expired or is no longer valid. Caches are used to store frequently accessed data to improve performance. However, if the underlying data changes while it is cached, the cached data becomes stale. For example, if a web page is cached, but the actual content of the page is updated on the server, the cached version becomes stale until it is refreshed.

Replicated Systems: In distributed systems with data replication, stale data can occur when updates are made to the data on one replica but not propagated to other replicas. As a result, different replicas may have inconsistent or outdated versions of the data.

Databases: Stale data can also occur in databases when queries or reports are based on outdated data. For example, if a report is generated using data from yesterday, but new data has been added since then, the report will contain stale information.

Managing stale data is important to ensure data accuracy and consistency. Techniques such as cache expiration policies, cache invalidation mechanisms, and data synchronization strategies in distributed systems are used to minimize the impact of stale data and maintain data integrity.
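To make expiration and invalidation concrete, here is a minimal sketch of a cache with a time-to-live (TTL). The `TTLCache` class, its `clock` parameter, and the `invalidate` method are assumptions introduced for this example; the injectable clock only exists so the behavior can be shown without waiting.

```python
import time


class TTLCache:
    """Cache whose entries expire a fixed number of seconds after insertion."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic) -> None:
        self.ttl = ttl_seconds
        self._clock = clock
        self._items = {}  # key -> (value, expires_at)

    def put(self, key, value) -> None:
        self._items[key] = (value, self._clock() + self.ttl)

    def get(self, key, default=None):
        entry = self._items.get(key)
        if entry is None:
            return default
        value, expires_at = entry
        if self._clock() >= expires_at:
            # The entry is stale: drop it so the caller reloads fresh data.
            del self._items[key]
            return default
        return value

    def invalidate(self, key) -> None:
        # Explicit invalidation, for example right after the source changes.
        self._items.pop(key, None)


now = [0.0]                                   # fake clock for the demo
pages = TTLCache(ttl_seconds=30, clock=lambda: now[0])
pages.put("home", "version 1")
fresh = pages.get("home")                     # still within the TTL
now[0] = 31.0                                 # 31 seconds later
expired = pages.get("home")                   # entry has expired
```

A shorter TTL reduces how long stale data can be served, at the cost of more reloads from the underlying source.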

There are several technologies and frameworks that implement caching to improve performance and reduce latency. Here are some commonly used technologies for caching:

Memcached: Memcached is a distributed memory caching system that stores data in memory to accelerate data retrieval. It is often used to cache frequently accessed data in high-traffic websites and applications.

Redis: Redis is an in-memory data structure store that can be used as a database, cache, or message broker. It supports various data structures and provides advanced caching capabilities, including persistence and replication.

Apache Ignite: Apache Ignite is an in-memory computing platform that includes a distributed cache. It allows caching of data across multiple nodes in a cluster, providing high availability and scalability.

Varnish: Varnish is a web application accelerator that acts as a reverse proxy server. It caches web content and serves it directly to clients, reducing the load on backend servers and improving response times.

CDNs (Content Delivery Networks): CDNs are distributed networks of servers located worldwide. They cache static content such as images, videos, and files, delivering them from the nearest server to the user, reducing latency and improving content delivery speed.

Squid: Squid is a widely used caching proxy server that caches frequently accessed web content. It can be deployed as a forward or reverse proxy, caching web pages, images, and other resources to accelerate content delivery.

Java Caching System (JCS): JCS is a Java-based distributed caching system that provides a flexible and configurable caching framework. It supports various cache topologies, including memory caches, disk caches, and distributed caches.

Hazelcast: Hazelcast is an open-source in-memory data grid platform that includes a distributed cache. It allows caching of data across multiple nodes and provides features such as high availability, data partitioning, and event notifications.

These are just a few examples, and there are many other caching technologies available, each with its own features and use cases.

Buffer and cache are both used in computing and storage systems to improve performance, but they serve different purposes and operate at different levels.

Purpose: A buffer is a temporary storage area that holds data during the transfer between two devices or processes to mitigate any differences in data transfer speed or data handling capabilities.

Function: Buffers help smooth out the flow of data between different components or stages of a system by temporarily storing data and allowing for asynchronous communication.

Data Access: Data in a buffer is typically accessed in a sequential manner, processed or transferred, and then emptied to make room for new data.

Size: Buffer sizes are usually fixed and limited, and they are often small in comparison to the overall data being processed or transferred.

YouTube and Netflix are good examples of the use of buffering.

Purpose: A cache is a higher-level, faster storage layer that stores copies of frequently accessed data to reduce access latency and improve overall system performance.

Function: Caches serve as a transparent intermediary between a slower, larger storage (such as a disk or main memory) and the requesting entity, providing faster access to frequently used data.

Data Access: Caches use various algorithms and policies to determine which data to store and evict, aiming to keep the most frequently accessed data readily available.

Examples: Caches are widely used in CPUs (processor cache) to store frequently accessed instructions and data, web browsers (web cache) to store web pages and resources, and storage systems (disk cache) to store frequently accessed disk blocks.

In summary, buffers are temporary storage areas used to manage data transfer or communication between components, while caches are faster storage layers that store frequently accessed data to reduce latency and improve performance. Buffers focus on data flow and synchronization, while caches focus on data access optimization.
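The contrast can be illustrated with two standard-library tools, under the assumption that a `deque` stands in for a buffer and `functools.lru_cache` for a cache. The chunk names and the `load_profile` function are hypothetical.

```python
from collections import deque
from functools import lru_cache

# Buffer: data passes through once, in order, and is removed when consumed.
buffer = deque()
for chunk in ("chunk-1", "chunk-2", "chunk-3"):
    buffer.append(chunk)             # the producer fills the buffer

played = []
while buffer:
    played.append(buffer.popleft())  # the consumer drains it sequentially

# Cache: a copy of the result is kept so that repeated reads are faster.
calls = []


@lru_cache(maxsize=128)
def load_profile(user_id: int) -> str:
    calls.append(user_id)            # records each real (slow) lookup
    return f"profile-{user_id}"


load_profile(7)   # miss: the function body runs
load_profile(7)   # hit: served from the cache, the body does not run
```

After the loop the buffer is empty, while the cache still holds the profile and has only performed one real lookup.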

We saw the trade-offs of each cache and when to use them in a real-world situation.

Mastering the Fundamentals of Cache for Systems Design Interview, by Rafael del Nero. August 28, 24 min read.



What are the different techniques for cache prefetching? Spatial cache prefetching involves bringing data blocks into the cache hierarchy ahead of demand accesses to mitigate the bottleneck caused by frequent main memory accesses.

This technique can be enhanced by exploiting the high usage of large pages in modern systems, which allows prefetching beyond the 4KB physical page boundaries typically used by spatial cache prefetchers.

Data item prefetching involves selecting and adding candidate data items to the cache based on their scores, which are determined by their likelihood of being accessed in the future.

These techniques can be combined with machine learning approaches to learn policies for prefetching, admission, and eviction processes, using past misses and future frequency and recency as indicators.

Additionally, prefetching can be performed in mass storage systems by fetching a certain data unit and prefetching additional data units that have similar activity signatures.

How can game theory be used to design caching policies in small cell networks? One approach is to use game theory to model the caching system as a Stackelberg game, where small-cell base stations (SBS) are treated as resources.

This allows for the establishment of profit models for network service providers (NSPs) and video retailers (VR), and the optimization of pricing and resource allocation. Additionally, game theory can be used to model load balancing in small cell heterogeneous networks, leading to the development of hybrid load balancing algorithms that improve network throughput and reduce congestion.

Overall, game theory provides a framework for optimizing caching policies and resource allocation in small cell networks.
