With the exponential growth in the number of big data applications in the world, testing them has become essential. Testing in big data applications relates to database, infrastructure, performance, and functional testing.

The advancement of technology is enabling the collection of a massive amount of data almost every second.

And big data has emerged as the buzzword that is used by companies that have an enormous amount of data to handle.

Companies often find it difficult to manage the amount of information they collect.

They also face testing challenges in end-to-end testing in optimum test environments. They require a robust data testing strategy. Big data seems to be becoming an increasingly prevalent phenomenon, and it is not without reason. As the world continues to generate a mind-boggling amount of data every single day, big data has become a crucial tool, allowing companies to gain critical insights into their businesses, customers, etc.

However, in order to leverage the true potential presented by big data, this technology must be subjected to robust testing, i.e., the data must be gathered, analyzed, stored, and retrieved in accordance with a proper plan.

Big data testing brings several key benefits. However, to realize them, you need to get your big data testing strategy right. So, go ahead and start looking for a trusted service provider.

Top Strategies and Best Practices for Big Data Testing. Ryan Williamson, March 27. Tags: Data Strategist.


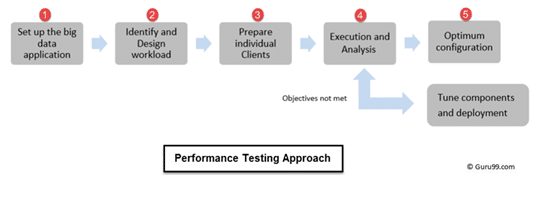

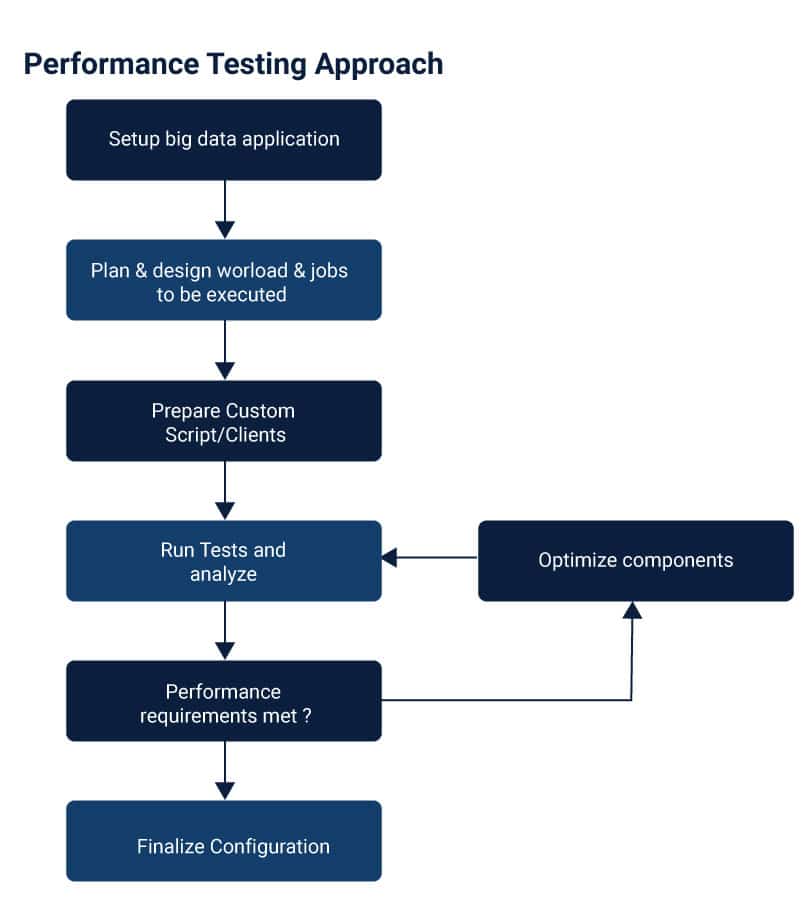

: Performance testing for big data applications| Why Choose ScienceSoft for Big Data App Testing | Then, end-to-end functional testing should ensure the flawless functioning of the entire application. This testing type validates seamless communication of the entire big data application with third-party software, within and between multiple big data application components and the proper compatibility of different technologies used. For example, if your analytics solution comprises technologies from the Hadoop family like HDFS, YARN, MapReduce, and other applicable tools , a test engineer checks the seamless communication between them and their elements e. So, while validating the integrations within an operational part of your big data app, a test engineer together with a data engineer should check that data schemas are properly designed, are inter-compatible initially and remain compatible after any introduced changes. To ensure the security of large volumes of highly sensitive data, a security test engineer should:. At a higher level of security provisioning, cybersecurity professionals perform an application and network security scanning and penetration testing. Test engineers check, whether the big data warehouse perceives SQL queries correctly, and validate the business rules and transformation logic within DWH columns and rows. BI testing, as a part of DWH testing, helps ensure data integrity within an online analytical processing OLAP cube and smooth functioning of OLAP operations e. Test engineers check how the database handles queries. Still, big data test and data engineers should check if your big data is good enough quality on these potentially problematic levels:. Based on ScienceSoft's ample experience in rendering software testing and QA services, we list some common high-level stages:. Each big data application requirement should be clear, measurable, and complete; functional requirements can be designed in the form of user stories. 
Also, the QA manager designs a KPI suite, including such software testing KPIs as the number of test cases created and run per iteration, the number of defects found per iteration, the number of rejected defects, overall test coverage, defect leakage, and more. Besides, a risk mitigation plan should be created to address possible quality risks in big data application testing. At this stage, you should outline scenarios and schedules for the communication between the development and testing teams. Preparation for the big data testing process will differ based on the sourcing model you opt for: in-house testing or outsourced testing. If you opt for in-house big data app testing, your QA manager outlines a big data testing approach, creates a big data application test strategy and plan, estimates required efforts, arranges training for test engineers and recruits additional QA talents. The first team, which consists of the talents who have experience in testing event-driven systems, non-relational database testing, and more, caters to the operational part of the big data application. The second team takes care of the analytical component of the app and comprises talents with experience in big data DWH, analytics middleware and workflows testing. In our projects, for each of the big data testing teams we assign an automated testing lead to design a test automation architecture, select and configure fitting test automation tools and frameworks. If you lack in-house QA resources to perform big data application testing, you can turn to outsourcing. To choose a reliable vendor, you should:. ScienceSoft's tip : If a shortlisted vendor lacks some relevant competency, you may consider multi-sourcing. During big data application testing, your QA manager should regularly measure the outsourced big data testing progress against the outlined KPIs and mitigate possible communication issues. 
Big data app testing can be launched when a test environment and a test data management system are set up. Still, as the test environment does not fully replicate the production mode, make sure it provides high-capacity distributed storage to run tests at different scale and granularity levels. "Our cooperation with ScienceSoft was a result of a competition with another testing company where the focus was not only on quantity of testing, but very much on quality and the communication with the testers. ScienceSoft came out as the clear winner. We have worked with the team in very close cooperation ever since and value the professional as well as flexible attitude towards testing." Testing a big data application comprising the analytical and the operational part usually requires two corresponding testing teams. Below we describe typical testing project roles relevant for both teams. Their specific competencies will differ and depend on the architectural patterns and technologies used within the two big data application parts. Note: the actual number of test automation engineers and test engineers will depend on the number of the app's components and the complexity of its workflows. A professional testing team of balanced number and qualifications, along with transparent quotes, makes the QA and testing budget coherent and predictable. The testing budget for each big data application is different due to its specific characteristics, which determine the testing scope. Additionally, for either outsourced or in-house big data application testing, you should factor in the cost of employed tools. ScienceSoft will scrutinize your big data application requirements to provide a detailed calculation of testing costs and ROI. ScienceSoft is a global IT consulting, software development, and QA company headquartered in McKinney, TX, US.
Software testing demands a keen focus on data quality. Testers have to deal with the hurdles and must make sure the data is right, complete, and trustworthy. The role of big data testing is increasingly vital for enterprise application quality. Testers are tasked with ensuring seamless data collection in this critical process. Moreover, supporting technologies for big data, like affordable storage solutions and diverse database types, play a vital role. Easily accessible, powerful computing resources further contribute to the growing importance of these technologies. In this digital age, information reigns supreme. Big data refers to the immense volume of structured and unstructured data generated by various sources, ranging from business transactions and social media interactions to sensor data and beyond.
The traditional methods of handling data are often insufficient for these colossal datasets, necessitating advanced technologies and tools for storage, processing, and analysis. The big data landscape is not confined to a single type of information. Instead, it embraces a multitude of data types, including but not limited to the following. Structured Data: Structured data is highly organized and follows a clear, predefined structure. It is easily searchable and often resides in traditional databases. Unstructured Data: Unstructured data lacks a predefined data model. Semi-Structured Data: Semi-structured data incorporates elements of both. It often appears in formats like JSON or XML. Moreover, big data originates from diverse sources like social media platforms, IoT devices, online transactions, and more. This diversity underscores the complexity of the big data landscape, requiring tailored approaches to harness its full potential. Understanding big data involves recognizing its defining characteristics, commonly known as the three Vs. Volume: Big data is massive in scale, often exceeding the storage and processing capacities of traditional databases. It encompasses vast amounts of information, creating challenges and opportunities for businesses seeking to harness its potential. Velocity: The speed at which data is generated, processed, and shared defines its velocity. In a world where real-time insights are crucial, the rapid pace of data creation requires systems that can keep up with the constant flow of information. Variety: Big data comes in diverse formats and types, including structured data like databases, semi-structured data like XML files, and unstructured data like text documents or social media posts. This variety adds complexity to data management and analysis. Testing big data applications presents a distinctive set of challenges that sets it apart from conventional testing scenarios.
The volume, velocity, and variety of data introduce complexities that demand specialized testing approaches. Some of the key challenges in testing big data applications are listed below. Testing big data applications involves handling massive datasets, which can be challenging due to the bulk of information. Traditional testing methods may not scale effectively to cope with such extensive data, requiring specialized approaches and tools. Big data applications often deal with diverse and complex data formats, such as JSON, XML, and unstructured data. Many big data applications use distributed computing frameworks like Apache Hadoop or Apache Spark. Testing the functionality and performance in a distributed environment introduces challenges related to data consistency, fault tolerance, and ensuring seamless communication between distributed components. Big data applications are expected to scale horizontally to handle increasing workloads. Testing the scalability and performance under varying loads is essential to identify bottlenecks, optimize resource utilization, and ensure the application can handle future growth. Big data applications often process sensitive and confidential information. Testing must include robust security measures to safeguard against data breaches and unauthorized access, and to ensure compliance with privacy regulations. In short, a comprehensive testing strategy is essential to ensure the reliability, performance, and security of these complex systems. Performance testing emerges as the cornerstone in guaranteeing the efficiency and reliability of big data systems. |

| What is Big Data Testing Strategy? | Also, make sure that the data in the output and in HDFS is not corrupted. Hadoop stores immense sets of data in a well-organized and secure way. If the architecture of such a big data system is not taken care of, performance will degrade badly and the predetermined requirements may not be met. So the testing should always take place in the Hadoop environment itself. Performance testing covers job completion time, proper use of storage, throughput, and system resources. Data processing must be proven to be flawless. Here the speed at which data arrives from different sources is determined. The messages that can be processed from different sources within a given time frame are identified. Here the speed of data insertion is determined. Here it is determined how fast the data is processed. Also, when the datasets are populated, data processing is tested in isolation. The tool consists of a large number of components, and each and every component must be tested. The speed of message indexing, the consumption of those messages, the phases of the MapReduce procedure, and search support all come under this phase. Performance testing for big data applications involves testing huge volumes of structured and unstructured data, and it requires a specific testing approach to test such massive data. Hadoop is involved with the storage and maintenance of a large set of data, including both structured and unstructured data. A large and long testing workflow is involved here. Various parameters have to be verified for performance testing. Highly skilled technical experts are needed for automated testing. Automated tools do not solve unforeseen problems. It is a very important part of testing. 
Latency in this system creates timing problems in real-time testing. Image management is also handled here. A large amount of data has to be verified, and verified faster. The testing effort needs to be scaled up. Testing has to be done in several areas. The different components of Hadoop belong to different technologies, and each one of them needs its own kind of testing. A large number of testing components are required for the complete testing procedure, and a dedicated tool is not always available for each function. Controlling the complete environment requires a large number of solutions, which are not always available. Big Data Analytics Tools for Performance Testing. In the world of IT, Big Data is essential for handling large volumes of information swiftly and securely. Updated on 05th Feb, 24. Performance testing focuses on evaluating how well a system performs under different conditions, ensuring that it meets specified performance benchmarks. In the context of big data, performance testing becomes indispensable for the following. Load Testing: Simulating real-world conditions to evaluate how the application responds to varying levels of user activity. Stress Testing: Pushing the system beyond its limits to identify potential bottlenecks and failure points. 
By subjecting big data applications to rigorous performance testing services, organizations can identify and address performance issues before deployment, ensuring a robust and reliable user experience. The influence of big data on decision-making and operational strategies is profound. Businesses, irrespective of their size or industry, now harness the power of extensive datasets to gain insights into consumer behavior, market trends, and internal processes. It helps businesses in different respects which include:. Big data empowers decision-makers with comprehensive insights, enabling data-driven choices. Analyzing vast datasets allows for a deeper understanding of trends, patterns, and influencing factors, contributing to well-informed decision-making. It facilitates predictive modeling, enabling organizations to forecast future trends and outcomes. By analyzing historical data, businesses can make proactive decisions, anticipate market changes, and gain a competitive edge. Big datasets help in optimizing operations by identifying inefficiencies and streamlining workflows. Analysis of operational data helps enhance resource allocation, reduce costs, and improve overall efficiency, leading to operational excellence. Big datasets enable real-time processing, providing decision-makers with up-to-the-minute insights. This capability enhances agility in decision-making, allowing organizations to respond promptly to changing conditions and emerging opportunities. Gigantic datasets help in understanding customer behavior and preferences. Organizations can tailor products, services, and marketing strategies based on customer insights, fostering customer loyalty and positively impacting decision-making and operational planning. This voluminous data enhances supply chain management by providing visibility into the entire process. 
Analyzing data related to inventory, demand, and logistics enables organizations to optimize supply chain operations, reducing costs and improving overall efficiency. In short, the impact of big data on decision-making and operations is transformative and contributes to overall business success. The reliability and efficiency of big data systems become non-negotiable for businesses striving to maintain a competitive edge. Some of the real-world examples of leveraging big data are listed below:. E-commerce Giants: Companies like Amazon and Alibaba have revolutionized the e-commerce landscape by leveraging big data to understand customer preferences, optimize supply chains, and enhance user experiences. The robustness of their platforms, ensured through rigorous performance testing, enables them to handle massive transaction volumes seamlessly, even during peak periods. Financial Institutions: Banks and financial institutions utilize big data analytics to detect fraudulent activities, assess credit risks, and personalize customer experiences. Performance testing services guarantees the reliability of these systems, ensuring that critical financial operations proceed without disruptions. Healthcare Innovators: Healthcare organizations harness big data for patient care, drug discovery, and operational efficiency. Performance testing is crucial in these contexts to guarantee that healthcare applications process vast amounts of patient data accurately and swiftly, contributing to improved medical outcomes. Here, the symbiotic relationship between big data and performance testing emerges as a cornerstone for businesses aiming not just to survive but to thrive in the complexities of the modern business landscape. To sum it up, as businesses use lots of data to make decisions, performance testing services become quite crucial. RTCTek helps businesses to supercharge big data capabilities with top-tier performance engineering services. 
We ensure that big data systems work well, handle lots of information, and are ready for whatever the future brings. By combining big data with robust testing and tuning services, businesses can keep up with the times. This strategic integration ensures optimal system performance and positions organizations at the forefront of data-driven advancements. Big Data: Characteristics, Challenges, and the Role of Performance Testing. December 4, |
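The load-testing idea described above (simulate varying levels of activity and observe how the system responds) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: `fake_query` is a hypothetical stand-in for a real query against the system under test, and the worker counts are arbitrary. It is not the method of any particular vendor or tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import quantiles


def run_load(task, requests, workers):
    """Call task() `requests` times on `workers` threads.

    Returns the per-call latencies (seconds) and the total wall time.
    """
    def timed(_):
        start = time.perf_counter()
        task()
        return time.perf_counter() - start

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        latencies = list(pool.map(timed, range(requests)))
    return latencies, time.perf_counter() - wall_start


def summarize(latencies, wall_seconds):
    """Reduce raw latencies to throughput and latency percentiles."""
    cuts = quantiles(latencies, n=100, method="inclusive")  # 99 cut points
    return {
        "requests": len(latencies),
        "throughput_rps": len(latencies) / wall_seconds,
        "p50_s": cuts[49],
        "p95_s": cuts[94],
        "max_s": max(latencies),
    }


def fake_query():
    """Hypothetical stand-in for a query against the system under test."""
    time.sleep(0.001)


if __name__ == "__main__":
    # Increase concurrency step by step and watch how the metrics change.
    for workers in (1, 4, 16):
        stats = summarize(*run_load(fake_query, requests=40, workers=workers))
        print(workers, stats)
```

Pushing `workers` far beyond the expected level turns the same harness into a crude stress test; the point at which `p95_s` climbs sharply is a candidate bottleneck.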

| Big Data Software Testing: Types, Tools, Challenges & Solutions | Designed to scale up from one server to thousands, each offering local computation and storage. Extensive Guide to Big Data Application Testing: ScienceSoft has been rendering all-around software testing and QA services for 34 years and big data services for 10 years. Database Testing: As the name suggests, this testing typically involves the validation of data gathered from various databases. For big data software testing, the test environment should have: enough space for storage and processing of a large amount of data; a cluster with distributed nodes and data; and minimum CPU and memory utilization to keep performance high when testing big data performance. Performance Testing: Big data applications are meant to process different varieties and volumes of data. Designs a test automation architecture for the respective big data application part. |

| The Testing Procedure Is Filled With | This is possible through the adoption of proper infrastructure and the use of proper validation tools and data processing techniques. Points of concern: increased vendor risks. In testing data, the data value also needs to be taken care of. And further, validate it by comparing the output files with the input files. |
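Comparing the output files with the input files usually means recomputing the expected result independently and diffing it against what the job produced. The sketch below assumes a hypothetical aggregation job that sums `amount` per `customer`; the field names and the tolerance are illustrative and not taken from the source.

```python
from collections import defaultdict


def expected_totals(input_rows):
    """Recompute the aggregation independently of the job under test."""
    totals = defaultdict(float)
    for row in input_rows:
        totals[row["customer"]] += row["amount"]
    return dict(totals)


def diff_output(input_rows, output_rows, tolerance=1e-9):
    """Return (customer, expected, actual) for every mismatching key."""
    expected = expected_totals(input_rows)
    actual = {row["customer"]: row["total"] for row in output_rows}
    problems = []
    for customer in sorted(expected.keys() | actual.keys()):
        exp, act = expected.get(customer), actual.get(customer)
        # A key missing on either side, or a differing total, is a defect.
        if exp is None or act is None or abs(exp - act) > tolerance:
            problems.append((customer, exp, act))
    return problems


if __name__ == "__main__":
    job_input = [
        {"customer": "a", "amount": 10.0},
        {"customer": "a", "amount": 5.0},
        {"customer": "b", "amount": 7.0},
    ]
    job_output = [{"customer": "a", "total": 15.0}, {"customer": "b", "total": 8.0}]
    print(diff_output(job_input, job_output))
```

On real volumes the same comparison would typically run on a sample or inside the cluster rather than in a single Python process.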

| Azure Data Engineer Certification Training : ... | In this testing, the primary motive is to verify whether the data is adequately extracted and correctly loaded into HDFS. The tester has to ensure that the data is properly ingested according to the defined schema and also has to verify that there is no data corruption. The tester validates the correctness of the data by taking a small sample of source data and, after ingestion, comparing the source data with the ingested data. The data is then loaded into HDFS at the desired locations. Tools: Apache ZooKeeper, Kafka, Sqoop, Flume. Data Processing Testing: In this type of testing, the primary focus is on aggregated data. Whenever the ingested data is processed, validate whether the business logic is implemented correctly, and further validate it by comparing the output files with the input files. Tools: Hadoop, Hive, Pig, Oozie. Data Storage Testing: The output is stored in HDFS or any other warehouse. The tester verifies that the output data is correctly loaded into the warehouse by comparing the output data with the warehouse data. Tools: HDFS, HBase. Data Migration Testing: Data migration is mainly needed when an application is moved to a different server or when there is a technology change. Data migration is a process in which all of the user's data is migrated from the old system to the new system. Data migration testing verifies that the migration from the old system to the new one happens with minimal downtime and no data loss. For a smooth migration (elimination of defects), it is essential to carry out data migration testing. There are different phases of migration testing. Pre-Migration Testing: In this phase, the scope of the data sets is defined, including what data is included and excluded. The tables, data counts, and record counts are noted down. Migration Testing: This is the actual migration of the application. 
In this phase, all the hardware and software configurations are checked adequately according to the new system. The connectivity between all the components of the application is also verified, and it is checked whether any functionality has changed. Performance Testing Overview: All the applications involve the processing of significant data in a very short interval of time, which requires vast computing resources. For such projects, architecture also plays an important role. Any architecture issue can lead to performance bottlenecks in the process, so it is necessary to use performance testing to avoid bottlenecks. Performance testing mainly focuses on the following points. Data Loading and Throughput: In this area, the rate at which the data is consumed from different sources and the rate at which the data is created in the data store are observed. Data Processing Speed. Sub-System Performance: Here, the performance of the individual components that are part of the overall application is tested. Sometimes this is necessary to identify the bottlenecks. This process will test the complete workflow from Big Data Ingestion to Data Visualization. Oftentimes, an application might handle a short-term increase in load, but memory leaks or other issues could degrade performance over time. The goal is to identify and address these bottlenecks before they reach production. These tests may be run parallel to a continuous integration pipeline, but their lengthy runtimes mean they may not be run as frequently as load tests. Scalability tests measure an application's performance when certain elements are scaled up or down. 
For example, an e-commerce application might test what happens when the number of new customer sign-ups increases or how a decrease in new orders could impact resource usage. They might run at the hardware, software or database level. Volume tests, also known as flood tests, measure how well an application responds to large volumes of data in the database. In addition to simulating network requests, a database is vastly expanded to see if there's an impact on database queries or accessibility as network requests increase. Basically, this test tries to uncover difficult-to-spot bottlenecks. These tests are usually run before an application expects to see an increase in database size. For instance, an e-commerce application might run the test before adding new products. If you're testing dynamic data, you may need to capture and store dynamic values from the server to use in later requests. The entire process quickly becomes too time-consuming and brittle to use within an Agile environment. LoadNinja makes it easy to record and play back browser-based tests within minutes with no coding or correlation. Navigation timings and other data are sourced from real browsers to make debugging a lot faster. For instance, test engineers or developers can dive into a specific virtual user that experienced an issue and debug directly from the DOM, providing much greater insights than protocol-based tests. This can speed up the entire development process. LoadNinja also makes it easy to integrate with common DevOps tools, such as Jenkins and Zephyr for Jira. That way, you can ensure that load tests become a natural part of the development lifecycle while making it easier for everyone to access the insights. 
While load tests are the most popular performance tests, you may need to use other tests to answer key questions and incorporate them into your development lifecycle. Fortunately, LoadNinja makes that process easy. 6 Performance Tests to Better Understand Your Application. Performance Testing: Performance tests measure how a system behaves in various scenarios, including its responsiveness, stability, reliability, speed and resource-usage levels. Load Testing: Load tests apply an ordinary amount of stress to an application to see how it performs. Stress Testing: Stress tests are designed to break the application rather than address bottlenecks. Spike Testing: Spike tests apply a sudden change in load to identify weaknesses within the application and underlying infrastructure. Endurance Testing: Endurance tests, also known as soak tests, keep an application under load over an extended period of time to see how it degrades. Scalability Testing: Scalability tests measure an application's performance when certain elements are scaled up or down. |
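The test types listed above differ mainly in the shape of the load they apply over time. The following sketch expresses that as a schedule of target virtual users per minute. The baseline of 100 users, the ramp of 50 users per minute, the tenfold spike, and the durations are all hypothetical values chosen for illustration.

```python
# Illustrative durations in minutes; an endurance (soak) run lasts hours.
DURATION_MINUTES = {"load": 30, "stress": 30, "spike": 30, "endurance": 480}


def target_users(kind, minute, baseline=100):
    """Return the target number of virtual users at a given minute."""
    if kind in ("load", "endurance"):
        # Ordinary, steady load; endurance differs only in how long it runs.
        return baseline
    if kind == "stress":
        # Keep ramping up until something breaks.
        return baseline + 50 * minute
    if kind == "spike":
        # Sudden tenfold burst during minutes 10 and 11.
        return baseline * 10 if 10 <= minute < 12 else baseline
    raise ValueError(f"unknown test type: {kind}")


def schedule(kind):
    """Per-minute user targets for the whole run of one test type."""
    return [target_users(kind, m) for m in range(DURATION_MINUTES[kind])]


if __name__ == "__main__":
    for kind in DURATION_MINUTES:
        plan = schedule(kind)
        print(f"{kind}: {len(plan)} min, peak {max(plan)} users")
```

A load generator would read such a schedule and adjust its concurrency each minute; the schedule itself is independent of the tool used.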

Performance testing for big data applications

Big Data Testing Challenges and Solutions. Here are some of the common challenges that big data testers might face while working with the vast data pool.

Solution: Your data testing methodology should include the following testing approaches: Cluster Technique: Distribute immense amounts of data evenly to all nodes of a cluster. The large data sets are split into various chunks and stored in different nodes of a cluster.

Data Partitioning: This approach is less complex and simpler to execute. Testers can achieve parallelism at the CPU level through data partitioning.
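A minimal sketch of the partitioning idea: split the records into chunks by a stable hash of a key, then validate the chunks concurrently. The record layout, the `amount` rule, and the use of a thread pool are assumptions for illustration; a CPU-bound check would more likely use a process pool or run on the cluster itself.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def partition(records, n_partitions, key="id"):
    """Assign each record to a partition using a stable CRC32 hash of its key."""
    parts = [[] for _ in range(n_partitions)]
    for rec in records:
        idx = zlib.crc32(str(rec[key]).encode("utf-8")) % n_partitions
        parts[idx].append(rec)
    return parts


def count_invalid(part):
    """Hypothetical rule: `amount` must be present and non-negative."""
    return sum(1 for rec in part if rec.get("amount") is None or rec["amount"] < 0)


def validate_in_parallel(records, n_partitions=4):
    """Validate every partition concurrently and add up the defects found."""
    parts = partition(records, n_partitions)
    with ThreadPoolExecutor(max_workers=n_partitions) as pool:
        return sum(pool.map(count_invalid, parts))


if __name__ == "__main__":
    data = [{"id": i, "amount": i if i % 10 else None} for i in range(100)]
    print(validate_in_parallel(data))
```

Because the hash is stable, the same record always lands in the same partition, which makes failures reproducible across test runs.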

Types of Big Data Testing. Architecture Testing: This type of testing ensures that the processing of data is proper and meets the business requirements.

And, if the architecture is improper, it might result in performance degradation, due to which the processing of data may be interrupted and loss of data may occur.

Hence, architectural testing is vital to ensure the success of your Big Data project. Database Testing: As the name suggests, this testing typically involves the validation of data gathered from various databases.

It verifies that the data extracted from cloud sources or local databases is correct and proper. Performance Testing: It checks the loading and processing speed to ensure the stable performance of big data applications. This testing type helps check the velocity of the data coming from various databases and data warehouses as output, measured in IOPS (input/output operations per second).
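As a worked example of the IOPS measure mentioned above: IOPS is the number of I/O operations completed divided by the elapsed time, and multiplying by the block size gives data throughput. The figures below (120,000 operations in 60 seconds, 64 KiB blocks) are hypothetical.

```python
def iops(operations, seconds):
    """I/O operations per second over a measurement window."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return operations / seconds


def throughput_mb_per_s(iops_value, block_size_bytes):
    """Data throughput in megabytes (10^6 bytes) per second."""
    return iops_value * block_size_bytes / 1_000_000


if __name__ == "__main__":
    rate = iops(operations=120_000, seconds=60)      # 2000.0 IOPS
    print(rate, throughput_mb_per_s(rate, 64 * 1024))  # 131.072 MB/s
```

The same IOPS figure can mean very different throughput depending on block size, so a report should state both.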

Further, it validates the core big data application functionality under load by running different test scenarios. Functional Testing: Big data applications encompassing operational and analytical parts require thorough functional testing at the API level. Big Data Testing Tools: A QA team can get the most out of big data validation only when strong tools are in place.

Hadoop: Designed to scale up from one server to thousands, each offering local computation and storage.

HDFS: One of the major components of Apache Hadoop, the others being MapReduce and YARN.

Cloudera: Meets enterprise-level deployment demands, offers a free platform distribution that includes Apache Hadoop, Apache Impala and Apache Spark, provides enhanced security and governance, and enables organizations to collect, manage, control and distribute an enormous amount of data.

Cassandra: Provides high scalability and availability with no single point of failure.

Results achieved: a simplified process for revenue calculation, improved performance with accurate revenue and sales figures, and less manual intervention.

Author: Admin.

Data migration testing validates that the migration of data from the old system to the new system experiences minimal downtime with no data loss.
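One common way to check for data loss after a migration is to reconcile the source and target by key and by content. The sketch below is a minimal illustration of that idea, assuming rows are available as dictionaries with an `id` key; the function names and sample rows are hypothetical, and a real migration would stream rows from both systems rather than hold them in memory.

```python
import hashlib


def row_fingerprint(row: dict) -> str:
    """Hash a row's fields in sorted-key order so column order does not matter."""
    canonical = "|".join(f"{key}={row[key]}" for key in sorted(row))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key="id"):
    """Report rows that were lost, added or altered by the migration."""
    src = {row[key]: row_fingerprint(row) for row in source_rows}
    tgt = {row[key]: row_fingerprint(row) for row in target_rows}
    return {
        "missing_in_target": sorted(set(src) - set(tgt)),
        "unexpected_in_target": sorted(set(tgt) - set(src)),
        "changed": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


# Illustrative data: row 3 was dropped and row 2 was altered during migration.
old_system = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}, {"id": 3, "name": "c"}]
new_system = [{"id": 1, "name": "a"}, {"id": 2, "name": "B"}]

report = reconcile(old_system, new_system)
print(report)
```

An empty report in all three categories is the condition a migration test would assert on.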

Challenges are to be expected when testing unstructured data, especially for teams that are new to the tools used in big data scenarios. This tutorial covers both big data testing challenges and their solutions so that you can follow data testing best practices.

Problem: Many businesses today store exabytes of data in order to conduct daily business. Testers must audit this voluminous data to confirm its accuracy and relevance for the business. Manually testing this volume of data, even with hundreds of QA testers, is impossible.

Solution: Automation is essential to your big data testing strategy. In fact, data automation tools are designed to review the validity of this volume of data. Make sure to assign QA engineers skilled in creating and executing automated tests for big data applications.
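To make the idea of automated data validation concrete, the sketch below applies a small set of rules to every record in a batch and collects the failures. The field names (`customer_id`, `amount`, `currency`) and the rules themselves are assumptions made up for this example; a real suite would derive its rules from the business requirements and run them inside the team's chosen automation tool.

```python
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}  # hypothetical business rule


def validate_record(record: dict) -> list:
    """Return a list of rule violations for one record (empty if the record is valid)."""
    errors = []
    if not record.get("customer_id"):
        errors.append("missing customer_id")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        errors.append("amount must be a non-negative number")
    if record.get("currency") not in ALLOWED_CURRENCIES:
        errors.append("unknown currency")
    return errors


def validate_batch(records) -> dict:
    """Validate every record and map the index of each bad record to its violations."""
    failures = {}
    for index, record in enumerate(records):
        errors = validate_record(record)
        if errors:
            failures[index] = errors
    return failures


batch = [
    {"customer_id": "c1", "amount": 10.0, "currency": "USD"},
    {"customer_id": "", "amount": -5, "currency": "USD"},
    {"customer_id": "c3", "amount": "12", "currency": "JPY"},
]

for index, errors in validate_batch(batch).items():
    print(f"record {index}: {', '.join(errors)}")
```

Because the rules are plain functions, the same checks can run unattended on every batch, which is what replaces the manual audit described above.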

Problem: A significant increase in workload volume can drastically impact database accessibility, processing and networking for the big data application. Even though big data applications are designed to handle enormous amounts of data, they may not be able to handle immense workload demands.

Solution: Your data testing methods should include the clustering and data partitioning approaches described earlier.

Solution: First, your QA team should coordinate with both your marketing and development teams in order to understand how data is extracted from different sources and filtered, as well as the pre- and post-processing algorithms.

Provide proper training to your QA engineers designated to run test cases through your big data automation tools so that test data is always properly managed. Your QA testers can only enjoy the advantages of big data validation when strong testing tools are in place.

This big data tools tutorial recommends reviewing these highly rated big data testing tools when developing your big data testing strategy:

Hadoop: Most expert data scientists would argue that a tech stack is incomplete without this open-source framework. Hadoop can store massive amounts of various data types and handle innumerable tasks with top-of-class processing power. Make sure the QA engineers executing Hadoop performance testing for big data know Java.

HPCC: Standing for High-Performance Computing Cluster, this free tool is a complete big data application solution. HPCC features a highly scalable supercomputing platform with an architecture that provides high performance in testing by supporting data parallelism, pipeline parallelism and system parallelism.

Cloudera: Often referred to as CDH (Cloudera Distribution for Hadoop), Cloudera is an ideal testing tool for enterprise-level deployments. This open source tool offers a free platform distribution that includes Apache Hadoop, Apache Impala and Apache Spark. Cloudera is easy to implement, offers high security and governance, and allows teams to gather, process, administer, manage and distribute vast amounts of data.

Cassandra: Big industry players choose Cassandra for big data testing. This free, open source tool features a high-performing, distributed database designed to handle massive amounts of data on commodity servers. Cassandra offers automatic replication, linear scalability and no single point of failure, making it one of the most reliable tools for big data testing.

Storm: This free, open source testing tool supports real-time processing of unstructured data sets and is compatible with any programming language. Storm is reliable at scale, fault-tolerant and guarantees that data will be processed. This cross-platform tool offers multiple use cases, including log processing, real-time analytics, machine learning and continuous computation.

From one big data testing case study to the next, many companies can boast of the benefits of developing a big data testing strategy.

And the application can only improve once the team confirms that the data collected from different sources and channels functions as expected. What additional benefits of big data testing are in store for your team?

Here are some advantages that come to mind:

Performing comprehensive testing on big data requires expert knowledge in order to achieve robust results within the defined timeline and budget.

The best practices for testing big data applications are only within reach with a dedicated team of QA experts who have extensive experience in testing big data, be it an in-house team or outsourced resources. Need more guidance beyond this big data testing tutorial?

Choose to partner with a QA services provider like QASource. Our team of testing experts is skilled in big data testing and can help you create a strong big data testing strategy for your big data application.

Testing big data applications involves handling massive datasets, which can be challenging due to the bulk of information. Traditional testing methods may not scale effectively to cope with such extensive data, requiring specialized approaches and tools. Big data applications often deal with diverse and complex data formats, such as JSON, XML, and unstructured data.

Many big data applications use distributed computing frameworks like Apache Hadoop or Apache Spark. Testing the functionality and performance in a distributed environment introduces challenges related to data consistency, fault tolerance, and ensuring seamless communication between distributed components.

Big data applications are expected to scale horizontally to handle increasing workloads. Testing the scalability and performance under varying loads is essential to identify bottlenecks, optimize resource utilization, and ensure the application can handle future growth. Big data applications often process sensitive and confidential information.

Testing must include robust security measures to safeguard against data breaches and unauthorized access and to ensure compliance with privacy regulations.

In short, a comprehensive testing strategy is essential to ensure the reliability, performance, and security of these complex systems. Performance testing emerges as the cornerstone in guaranteeing the efficiency and reliability of big data systems.

This testing discipline focuses on evaluating how well a system performs under different conditions, ensuring that it meets specified performance benchmarks.

In the context of big data, performance testing becomes indispensable for:

Load Testing: Simulating real-world conditions to evaluate how the application responds to varying levels of user activity.

Stress Testing: Pushing the system beyond its limits to identify potential bottlenecks and failure points.

By subjecting big data applications to rigorous performance testing, organizations can identify and address performance issues before deployment, ensuring a robust and reliable user experience.
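The load testing idea above can be sketched as a small harness that sends the same number of requests at several concurrency levels and records latency and throughput for each. Here `handle_request` is a hypothetical stand-in for a call to the application under test, and the concurrency levels and request count are arbitrary assumptions. A stress test would follow the same pattern but keep raising the concurrency until errors or unacceptable latencies appear.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(payload: int) -> int:
    """Hypothetical stand-in for one call to the application under test."""
    time.sleep(0.001)  # simulate a small amount of processing time
    return payload * 2


def run_load(concurrency: int, total_requests: int) -> dict:
    """Send total_requests calls with the given concurrency and summarize the timings."""
    def timed_call(i):
        start = time.perf_counter()
        handle_request(i)
        return time.perf_counter() - start

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(timed_call, range(total_requests)))
    elapsed = time.perf_counter() - wall_start

    # Nearest-rank 95th percentile of the observed latencies.
    p95_index = math.ceil(0.95 * len(latencies)) - 1
    return {
        "concurrency": concurrency,
        "requests": len(latencies),
        "p95_latency_s": latencies[p95_index],
        "throughput_rps": len(latencies) / elapsed,
    }


results = [run_load(c, total_requests=40) for c in (1, 4, 16)]
for r in results:
    print(r)
```

Comparing the rows shows how latency and throughput move as simulated user activity grows, which is the signal used to locate bottlenecks before deployment.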

The influence of big data on decision-making and operational strategies is profound. Businesses, irrespective of their size or industry, now harness the power of extensive datasets to gain insights into consumer behavior, market trends, and internal processes.

It helps businesses in several respects. Big data empowers decision-makers with comprehensive insights, enabling data-driven choices.

Analyzing vast datasets allows for a deeper understanding of trends, patterns, and influencing factors, contributing to well-informed decision-making. Big data also facilitates predictive modeling, enabling organizations to forecast future trends and outcomes.

By analyzing historical data, businesses can make proactive decisions, anticipate market changes, and gain a competitive edge. Big datasets help in optimizing operations by identifying inefficiencies and streamlining workflows. Analysis of operational data helps enhance resource allocation, reduce costs, and improve overall efficiency, leading to operational excellence.

Big datasets enable real-time processing, providing decision-makers with up-to-the-minute insights.

Let's take a look at the six most popular types of performance tests that answer these questions and others. Performance tests measure how a system behaves in various scenarios, including its responsiveness, stability, reliability, speed and resource-usage levels. By measuring these attributes, development teams can be confident they're deploying reliable code that delivers the intended user experience. Waterfall development projects usually run performance tests before each release, but many Agile projects find it better to integrate performance tests into their process so they can quickly identify problems. For example, LoadNinja makes it easy to integrate with Jenkins and build load tests into continuous integration builds. Load tests are the most popular performance test, but there are many tests designed to provide different data and insights. Load tests apply an ordinary amount of stress to an application to see how it performs.
